This the multi-page printable view of this section. Click here to print.

Documentation

- 1: Overview

- 2: Getting Started

- 2.1: First time users

- 2.2: Apache Installation

- 2.3: Docker Installation

- 2.4: Customize Installation

- 3: API services

- 3.1: Quick reference

- 3.2: GET data

- 3.3: POST data

- 3.4: Update data - PUT

- 4: Concepts

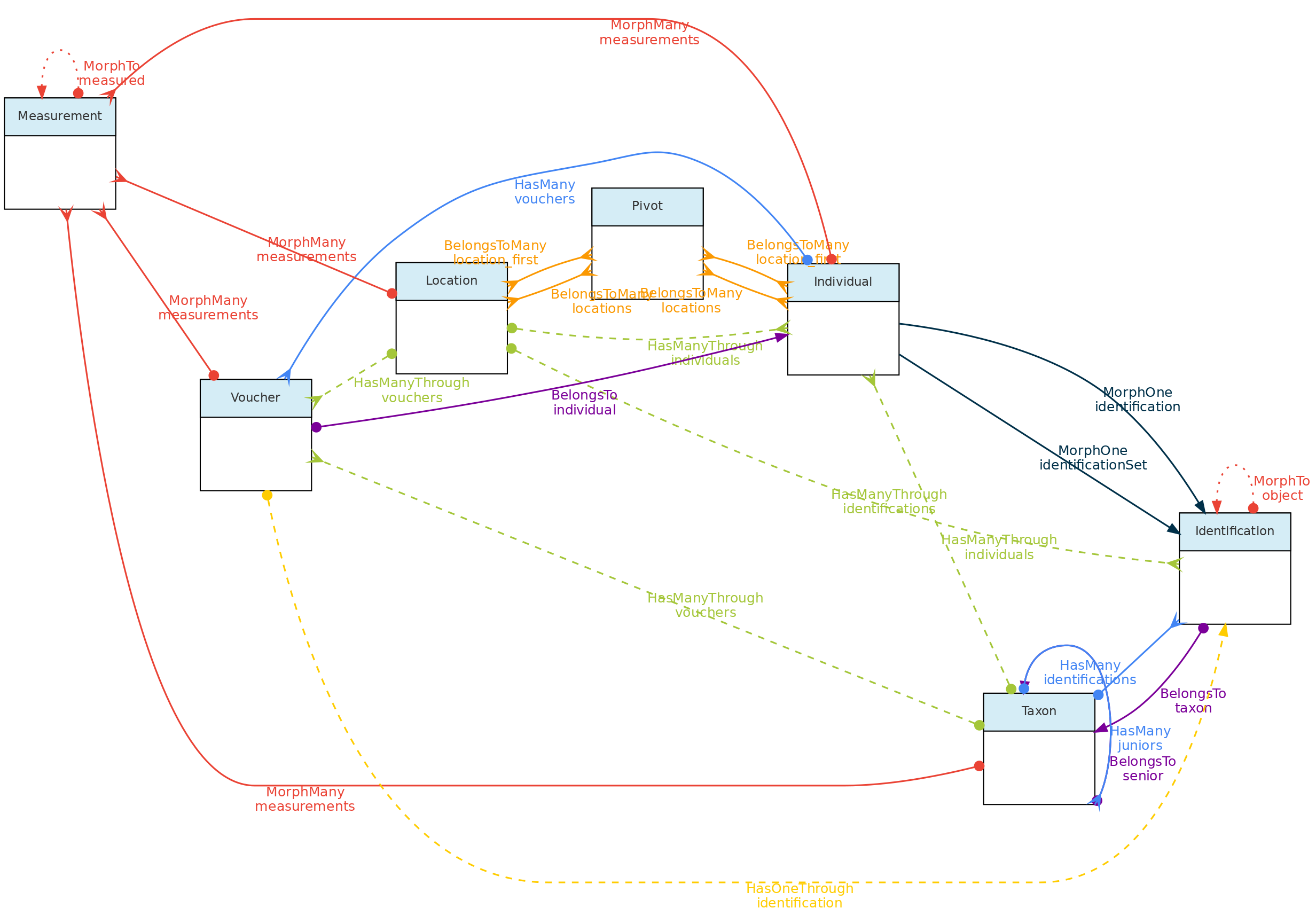

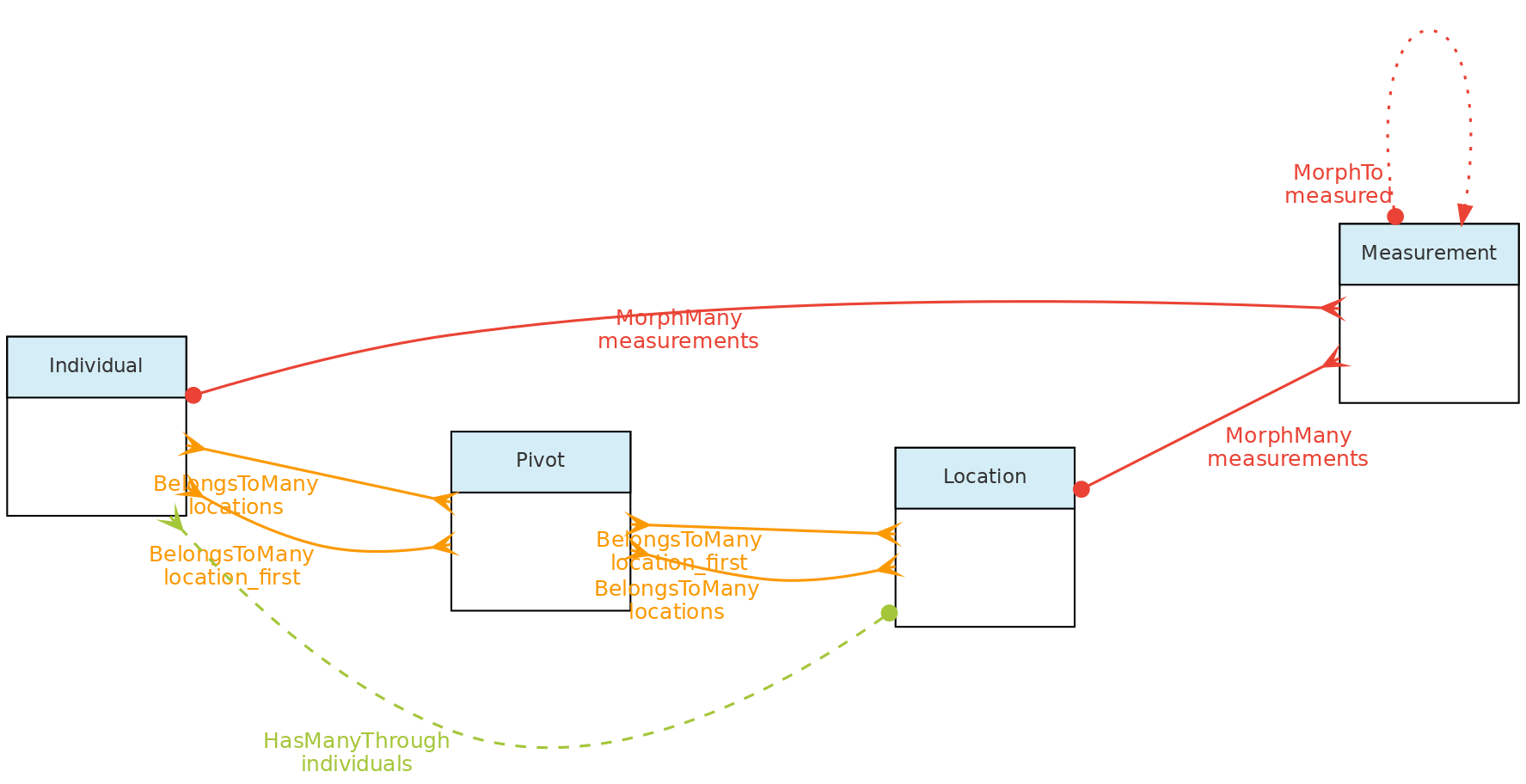

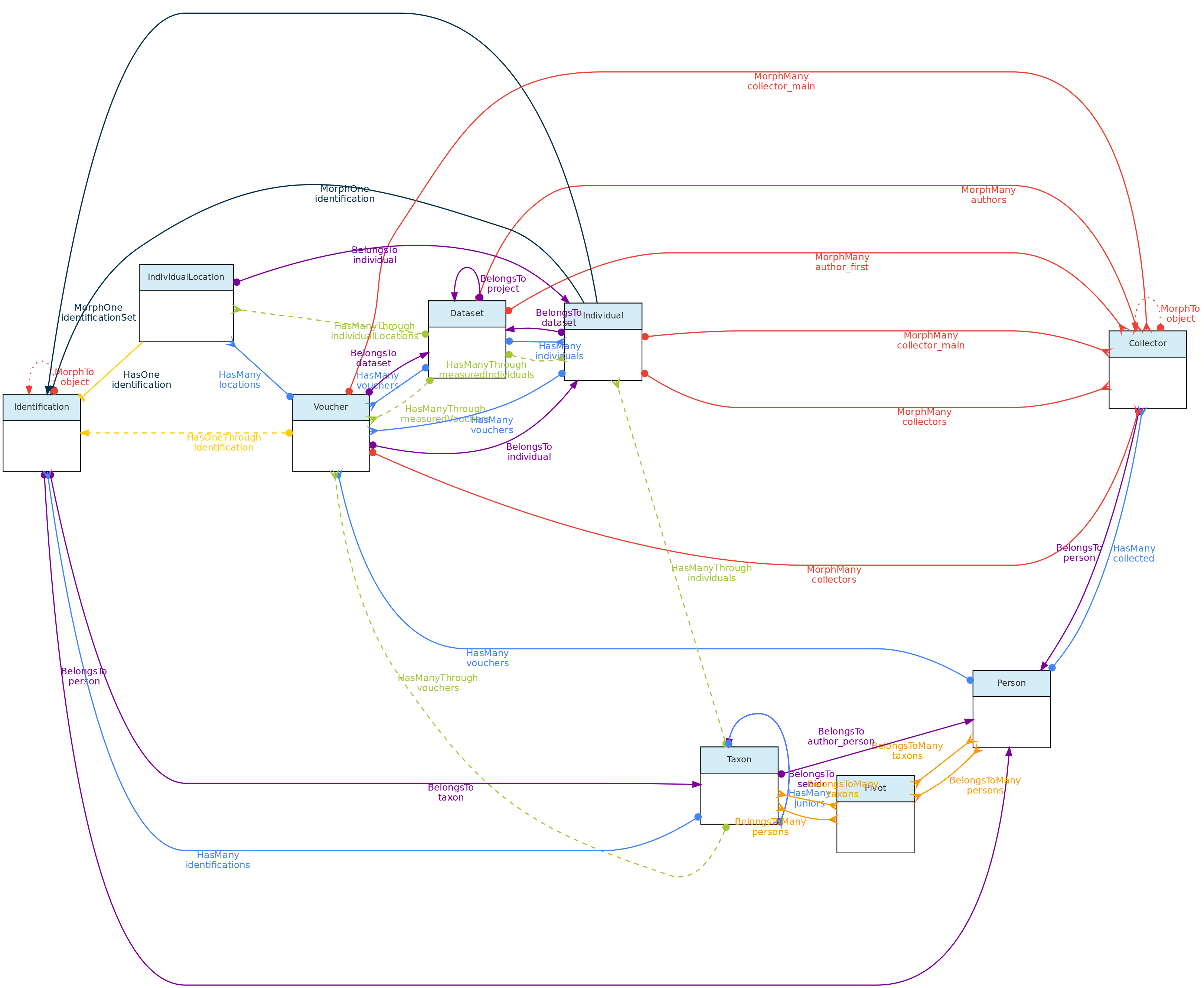

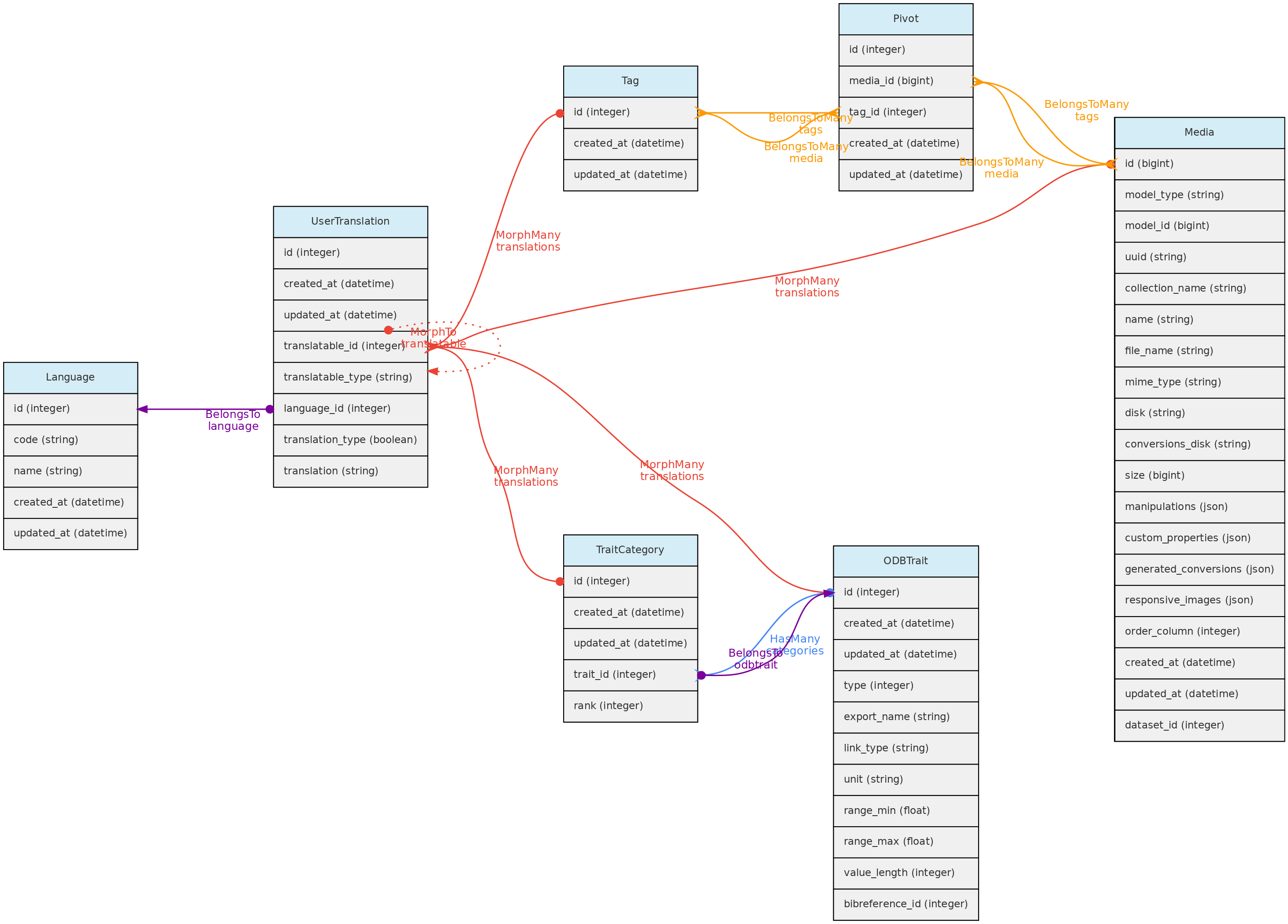

- 4.1: Core Objects

- 4.2: Trait Objects

- 4.3: Data Access Objects

- 4.4: Auxiliary Objects

- 5: Contribution Guidelines

- 6: Tutorials

- 6.1: Getting data with OpenDataBio-R

- 6.2: Import data with R

- 6.2.1: Import Locations

- 6.2.2: Import Taxons

- 6.2.3: Import Persons

- 6.2.4: Import Traits

- 6.2.5: Import Individuals & Vouchers

- 6.2.6: Import Measurements

1 - Overview

OpenDataBio is an opensource web-based platform designed to help researchers and organizations studying biodiversity in Tropical regions to collect, store, related and serve data. It is designed to accommodate many data types used in biological sciences and their relationships, particularly biodiversity and ecological studies, and serves as a data repository that allow users to download or request well-organized and documented research data.

Why?

Biodiversity studies frequently require the integration of a large amount of data, which require standardization for data use and sharing, and also continuous management and updates, particularly in Tropical regions where biodiversity is huge and poorly known.

OpenDataBio was designed based on the need to organize and integrate historical and current data collected in the Amazon region, taking into account field practices and data types used by ecologists and taxonomists.

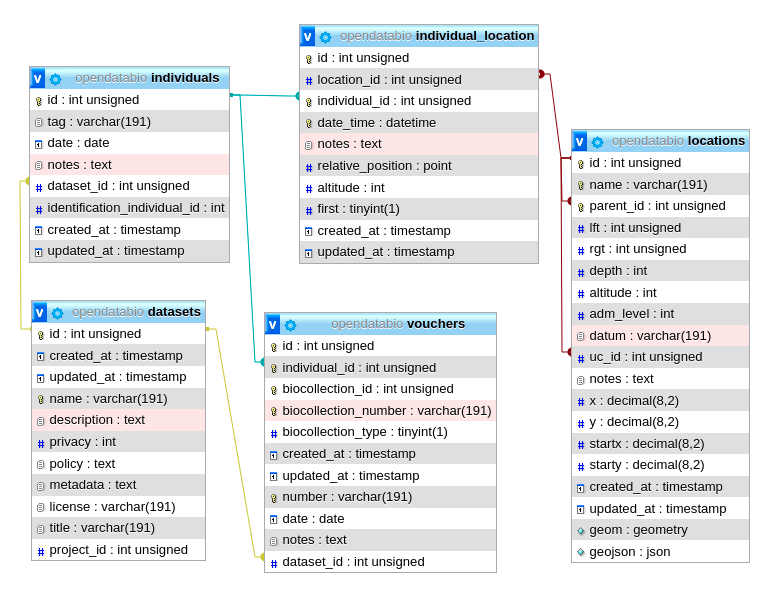

OpenDataBio aim to facilitate the standardization and normalization of data, utilizing different API services available online, giving flexibility to user and user groups, and creating the necessary links among Locations, Taxons, Individuals, Vouchers and the Measurements and Media-files associated with them, while offering accessibility to the data through an API service, facilitating data distribution and analyses.

Main features

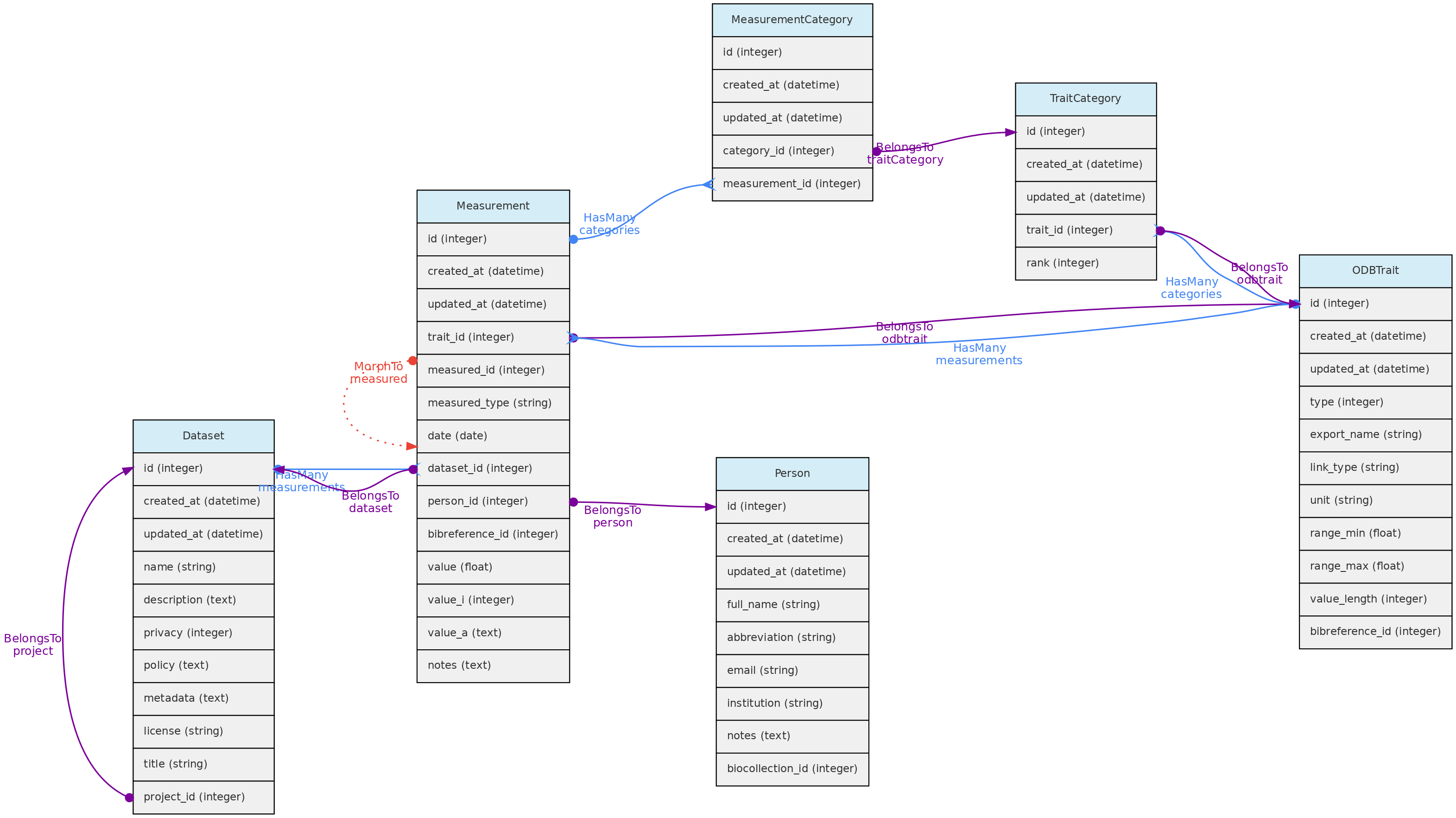

- Custom variables - the ability to define custom Traits, i.e. user defined variables of different types, including some special cases like Spectral Data, Colors, TaxonLinks and GeneBank. Measurements for such traits can be recorded for Individuals, Vouchers, Taxons and/or Locations.

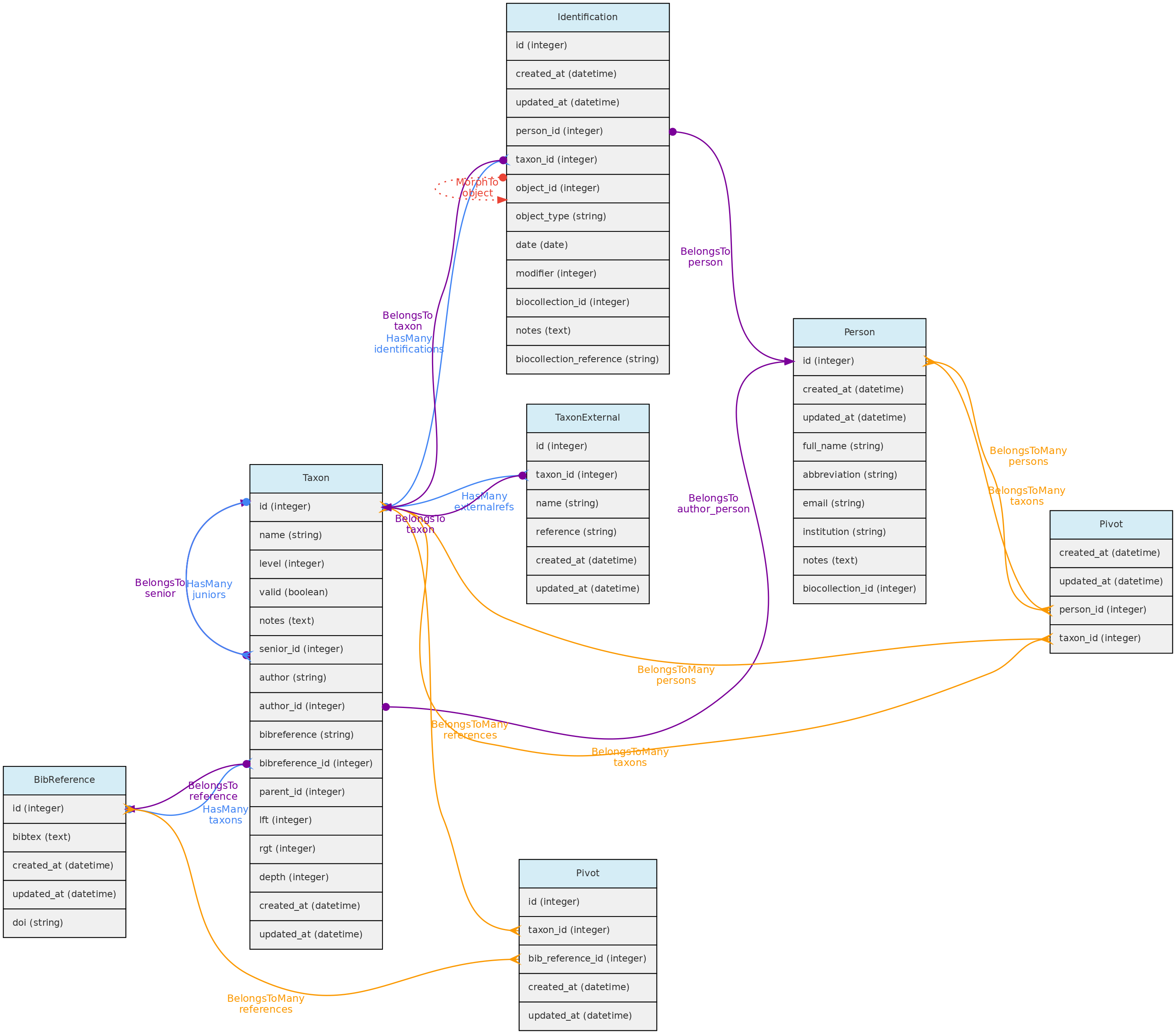

- Taxons can be published or unpublished names (e.g. a morphotype), synonyms or valid names, and any node of the tree of life may be stored. Taxon insertion are checked against different nomenclature data sources (Tropicos, IPNI, MycoBank,ZOOBANK, GBIF), minimizing your search for correct spelling, authorship and synonyms.

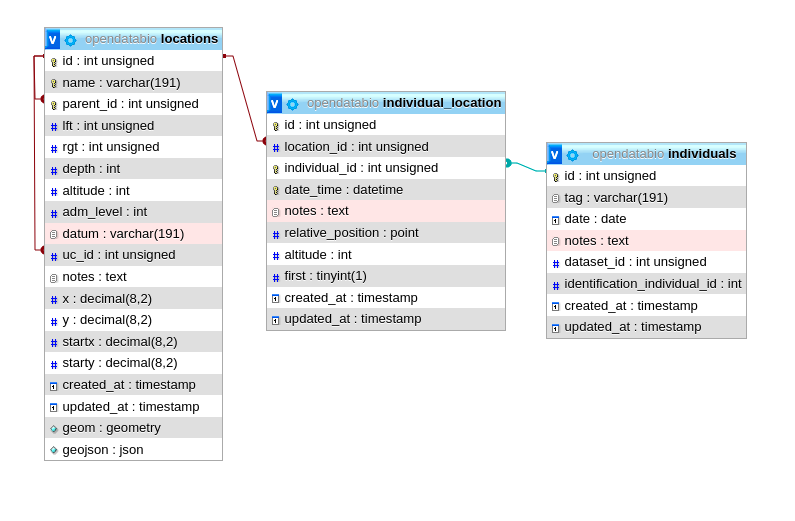

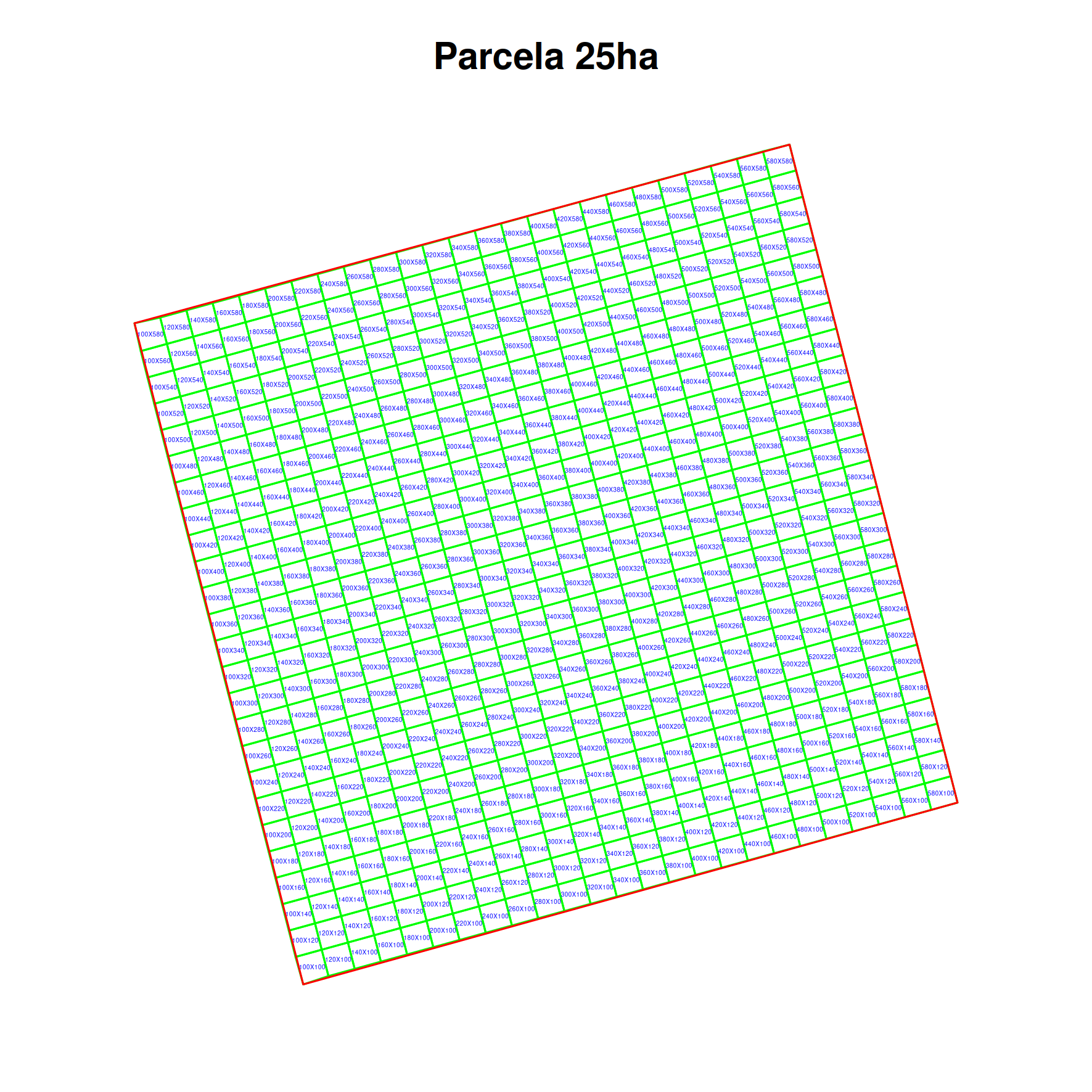

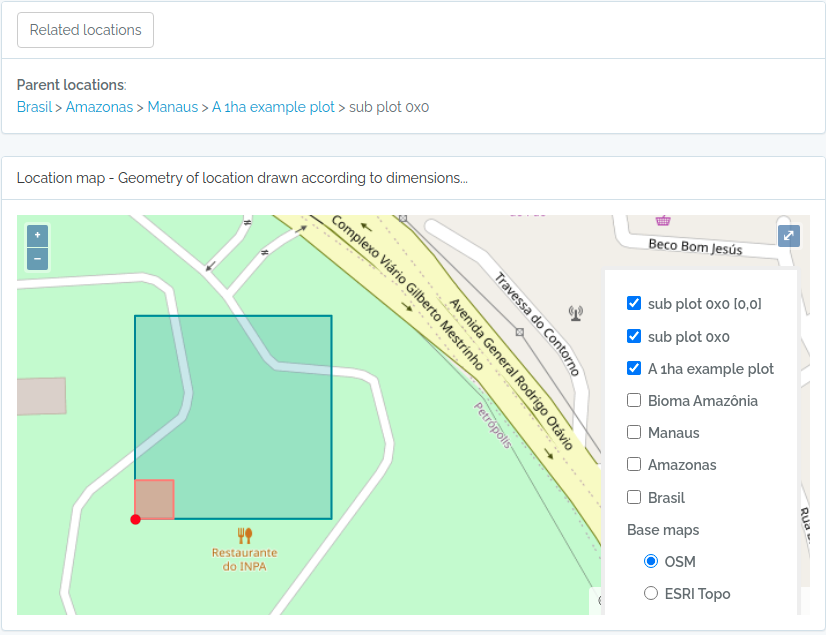

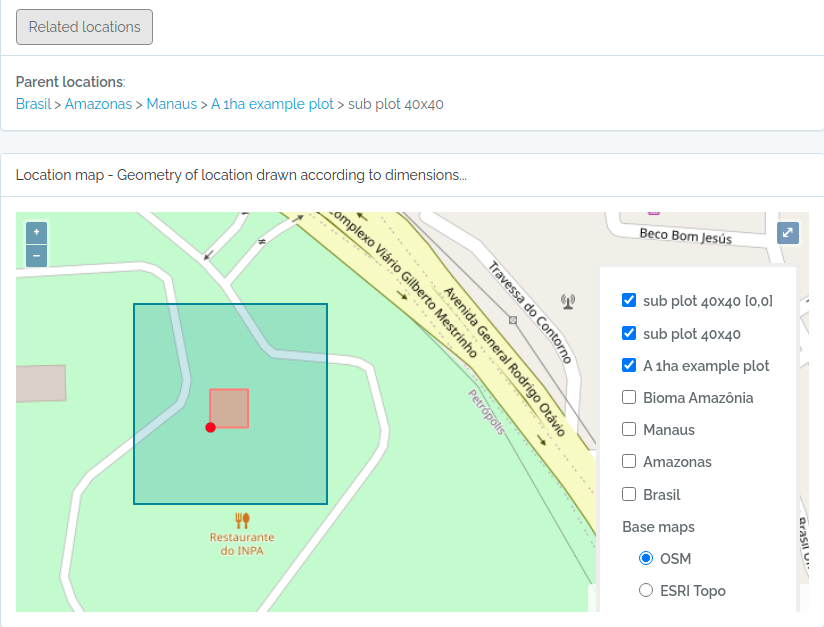

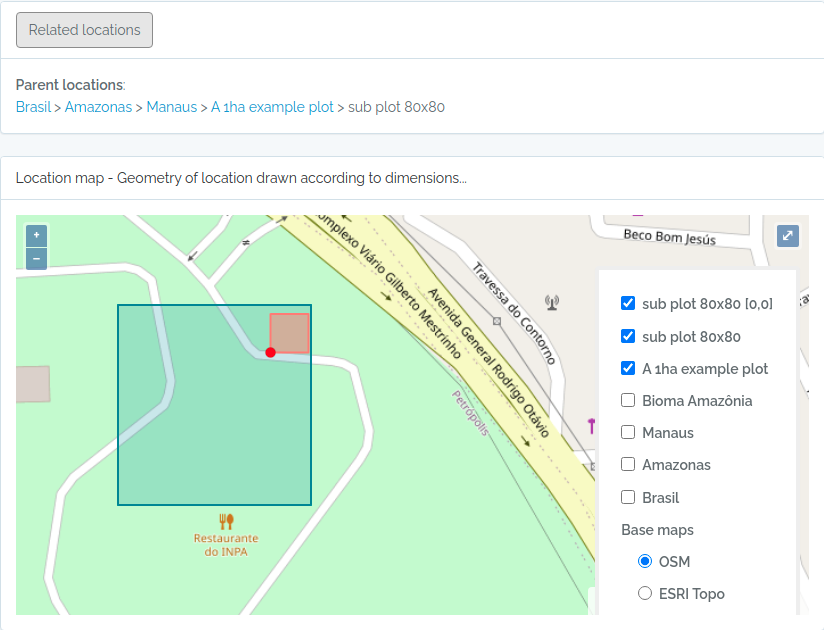

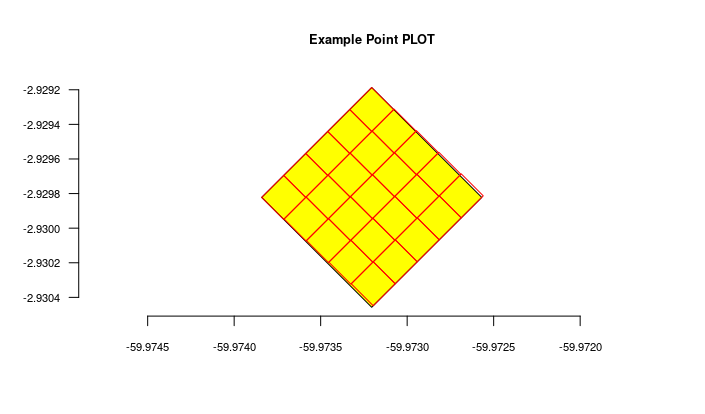

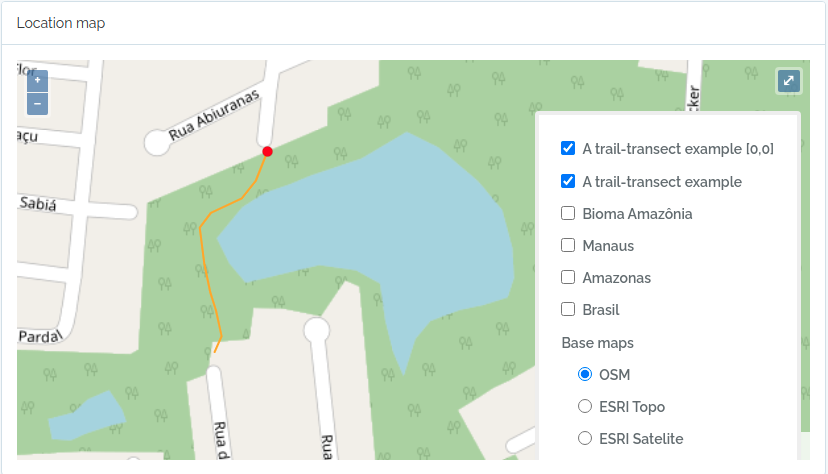

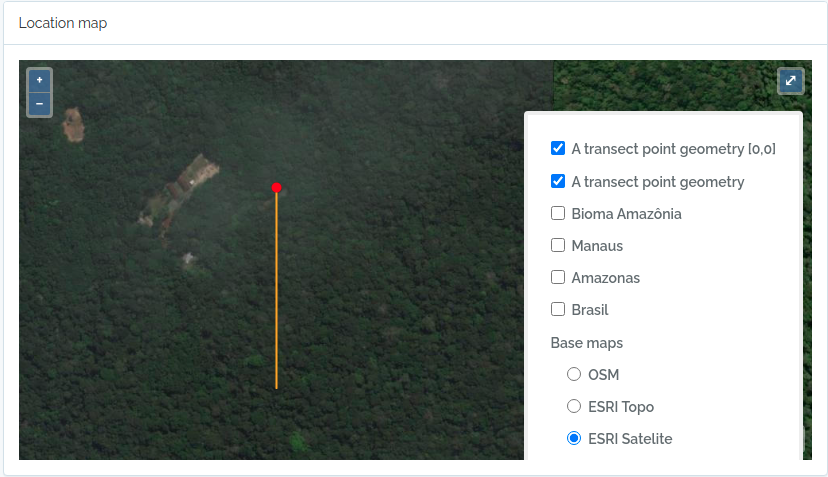

- Locations are stored with their spatial Geometries, allowing location parent detection and spatial queries. Special location types, such as Plots and Transects can be defined, facilitating commonly used methods in biodiversity studies

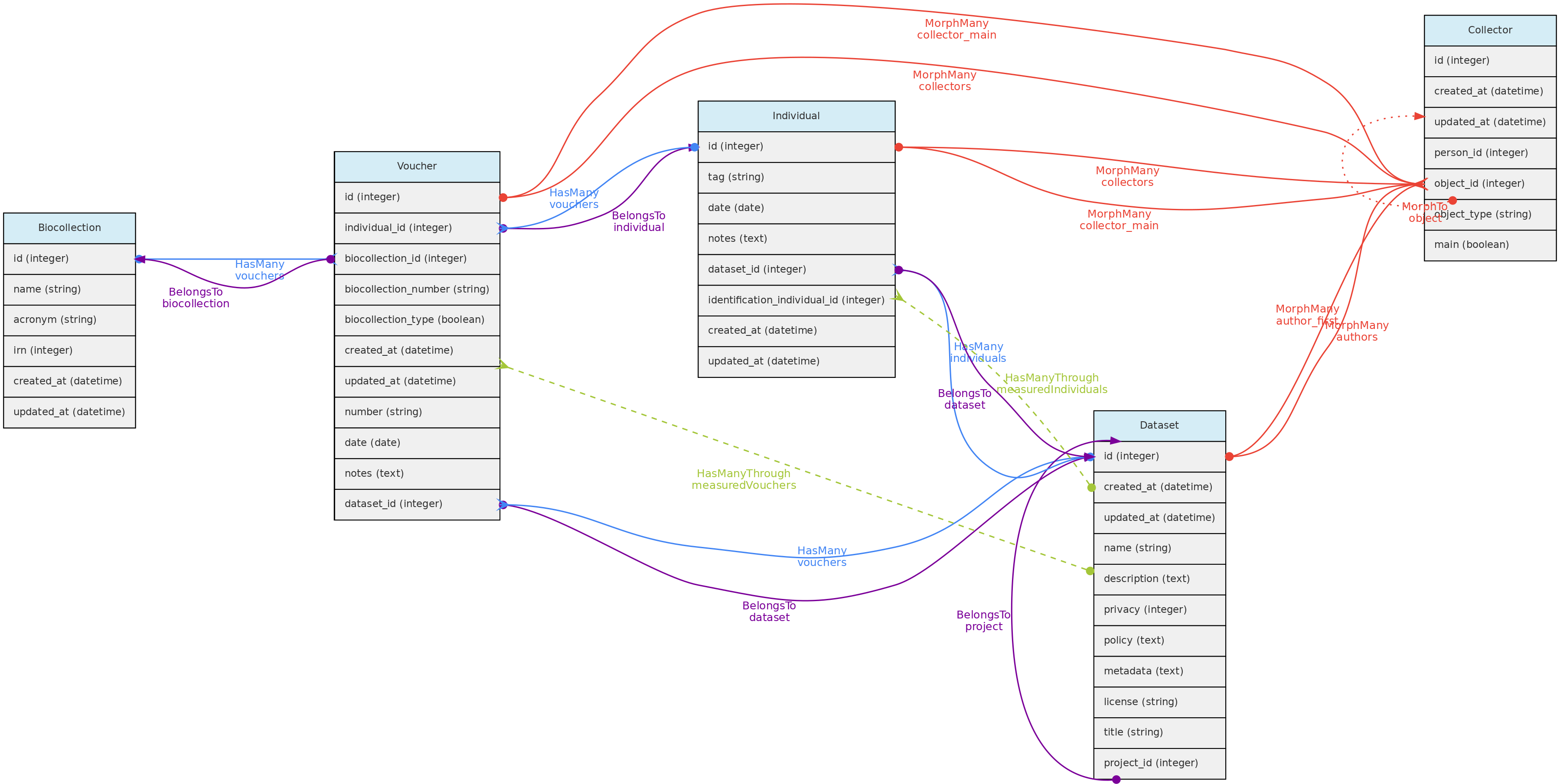

- Data access control - data are organized in Datasets that permits to define an access policy (public, non-public) and a license for distribution of public datasets, becoming a self-contained dynamic data publication, versioned by the last edit date.

- Different research groups may use a single OpenDataBio installation, having total control over their particular research data edition and access, while sharing common libraries such as Taxonomy, Locations, Bibliographic References and Trait definitions.

- API to access data programatically - Tools for data exports and imports are provided through API services along with a API client in the R language, the OpenDataBio-R package.

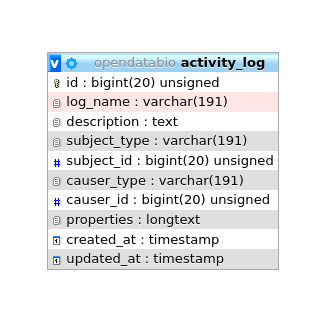

- Autiting - the Activity Model audits changes in any record and downloads of full datasets, which are logged for history tracking.

- The BioCollection model allows administrators of Biological Collections to manage their Voucher records as well as user-requests, facilitating the interaction with users providing samples and data.

- A mobile data collector is planned with ODK or ODK-X

Learn more

- Getting Started: install OpenDataBio!

- FAQs: Check out some example code!

2 - Getting Started

OpenDataBio is a web-based software supported in Debian, Ubuntu and Arch-Linux distributions of Linux and may be implemented in any Linux based machine. We have no plans for Windows support, but it may be easy to install in a windows machine using Docker.

Opendatabio is written in PHP and developed with the Laravel framework. It requires a web server (apache or nginx), PHP and a SQL database – tested only with MySQL and MariaDB.

You may install OpenDataBio easily using the Docker files included in the distribution, but these docker files provided are meant for development only and required tuning to deploy a production site.

If you just want to test OpenDataBio in your computer, follow the Docker Installation.

Prep for installation

- You may want to request a Tropicos.org API key for OpenDataBio to be able to retrieve taxonomic data from the Tropicos.org database. If not provided, mainly the GBIF nomenclatural service will be used;

- OpenDataBio sends emails to registered users, either to inform about a Job that has finished, to send data requests to dataset administrators or for password recovery. You may use a Google Email for this, but will need to change the account security options to allow OpenDataBio to use the account to send emails (you need to turn on the

Less secure app accessoption in the Gmail My Account Page and will need to create a cron job to keep this option alive). Therefore, create a dedicated email address for your installation. Check the “config/mail.php” file for more options on how to send e-mails.

2.1 - First time users

OpenDataBio is software to be used online. Local installations are for testing or development, although it could be used for a single-user production localhost environment.

User roles

Attention

If you have registered you need someone to assign you a full user role, so you can enter data.- If you are installing, the first login to an OpenDataBio installation must be done with the default super-admin user:

admin@example.organdpassword1. These settings should be changed or the installation will be open to anyone reading the docs; - Self-registrations only grant access to datasets with privacy set to

registered usersand allows user do download data of open-access, but do not allow the user to edit nor add data; - Only full users can contribute with data.

- But only super admin can grant

full-user roleto registered users - different OpenDataBio installations may have different policies as to how you may gain full-user access. Here is not the place to find that info.

See also User Model.

Prep your full-user account

- Register yourself as Person and assign it as your user default person, creating a link between your user and yourself as collector.

- You need at least a dataset to enter your own data

- When becoming a full-user, a restricted-access Dataset and Project will be automatically created for you (your Workspaces). You may modify these entities to fit your personal needs.

- You may create as many Projects and Datasets as needed. So, understand how they work and which data they control access to.

Entering data

There three main ways to import data into OpenDataBio:

- One by one through the web Interface

- Using the OpenDataBio POST API services:

- importing from a spreadsheet file (CSV, XLXS or ODS) using the web Interface

- using the OpenDataBio R package client

- When using the OpenDataBio API services you must prep your data or file to import according to the field options of the POST verb for the specific ’endpoint’ your are trying to import.

R Tutorials

See the Import data with R Tutorial for examples on how to import data with API.Tips for entering data

- If first time entering data, you should use the web interface and create at least one record for each model needed to fit your needs. Then play with the privacy settings of your Workspace Dataset, and check whether you can access the data when logged in and when not logged in.

- Use Dataset for a self-contained set of data that should be distributed as a group. Datasets are dynamic publications, have author, data, and title.

- Although ODB attempt to minimize redundancy, giving users flexibility comes with a cost, and some definitions, like that of Traits or Persons may receive duplicated entries. So, care must be taken when creating such records. Administrators may create a ‘code of conduct’ for the users of an ODB installation to minimize such redundancy.

- Follow an order for importation of new data, starting from the libraries of common use. For example, you should first register Locations, Taxons, Persons, Traits and any other common library before importing Individuals or Measurements

- There is no need to import POINT locations before importing Individuals because ODB creates the location for you when you inform latitude and longitude, and will detect for you to which parent location your individual belongs to. However, if you want to validate your points (understand where such point location will placed), you may use the Location API with

querytypeparameter specified for this. - There are different ways to create PLOT and TRANSECT locations - see here Locations if that is your case

- Creating Taxons require only the specification of a

name- ODB will search nomenclature services for you, find the name, metadata and parents and import all of the them if needed. If you are importing published names, just inform this single attribute. Else, if the name is unpublished, you need to inform additional fields. So, separate the batch importation of published and unpublished names into two sets. - The

notesfield of any model is for both plain text or JSON object string formatted data. The Json option allows you to store custom structured data any model having thenotes field. You may, for example, store as notes some secondary fields from original sources when importing data, but may store any additional data that is not provided by the ODB database structure. Such data will not be validate by ODB and standardization of both tags and values depends on you. Json notes will be imported and exported as a JSON string, and will be presented in the interface as a formatted table; URLs in your Json will be presented as links.

2.2 - Apache Installation

These instructions are for an apache-based installation, but can be easily tuned to work with nginx.

Server requirements

- The minimum supported PHP version is 8.0

- The web server may be apache or nginx. For nginx, check configuration in the docker files to tune these instructions, which are for apache.

- It requires a SQL database, MySQL and MariaDB have been tested, but may also work with Postgres. Tested with MYSQL.v8 and MariaDB.v15.1.

- PHP extensions required ‘openssl’, ‘pdo’, ‘pdo_mysql’, ‘mbstring’, ’tokenizer’, ‘xlm’, ‘dom’, ‘gd’, ’exif’, ‘bcmath’, ‘zip’

- Pandoc is used to translate LaTeX code used in the bibliographic references. It is not necessary for the installation, but it is suggested for a better user experience.

- Requires Supervisor, which is needed background jobs

Create Dedicated User

The recommended way to install OpenDataBio for production is using a dedicated system user. In this instructions this user is odbserver.

Download OpenDataBio

Login as your Dedicated User and download or clone this software to where you want to install it.

Here we assume this is /home/odbserver/opendatabio so that the installation files will reside in this directory. If this is not your path, change below whenever it applies.

Download OpenDataBio

Prep the Server

First, install the prerequisite software: Apache, MySQL, PHP, Pandoc and Supervisor. On a Debian system, you need to install some PHP extensions as well and enable them:

sudo apt-get install software-properties-common

sudo add-apt-repository ppa:ondrej/php

sudo add-apt-repository ppa:ondrej/php ppa:ondrej/apache2

sudo add-apt-repository ppa:ondrej/php

sudo add-apt-repository ppa:ondrej/apache2

sudo apt-get install mysql-server php8.0 libapache2-mod-php8.0 php8.0-intl \

php8.0-mysql php8.0-sqlite3 php8.0-gd php8.0-mysql php8.0-cli pandoc \

php8.0-mbstring php8.0-xml php8.0-gd php8.0-bcmath php8.0-zip php8.0-curl \

supervisor

sudo a2enmod php8.0

sudo phpenmod mbstring

sudo phpenmod xml

sudo phpenmod dom

sudo phpenmod gd

sudo a2enmod rewrite

sudo a2ensite

sudo systemctl restart apache2.service

#To check if they are installed:

php -m | grep -E 'mbstring|cli|xml|gd|mysql|pandoc|supervisord|bcmath|pcntl|zip'

Add the following to your Apache configuration.

- Change

/home/odbserver/opendatabioto your path (the files must be accessible by apache) - You may create a new file in the sites-available folder:

/etc/apache2/sites-available/opendatabio.confand place the following code in it.

touch /etc/apache2/sites-available/opendatabio.conf

echo '<IfModule alias_module>

Alias /opendatabio /home/odbserver/opendatabio/public/

Alias /fonts /home/odbserver/opendatabio/public/fonts

Alias /images /home/odbserver/opendatabio/public/images

<Directory "/home/odbserver/opendatabio/public">

Require all granted

AllowOverride All

</Directory>

</IfModule>' > /etc/apache2/sites-available/opendatabio.conf

This will cause Apache to redirect all requests for / to the correct folder, and also allow the provided .htaccess file to handle the rewrite rules, so that the URLs will be pretty. If you would like to access the file when pointing the browser to the server root, add the following directive as well:

RedirectMatch ^/$ /

Configure your php.ini file. The installer may complain about missing PHP extensions, so remember to activate them in both the cli (/etc/php/8.0/cli/php.ini) and the web ini (/etc/php/8.0/fpm/php.ini) files for PHP!

Update the values for the following variables:

Find files:

php -i | grep 'Configuration File'

Change in them:

memory_limit should be at least 512M

post_max_size should be at least 30M

upload_max_filesize should be at least 30M

Something like:

[PHP]

allow_url_fopen=1

memory_limit = 512M

post_max_size = 100M

upload_max_filesize = 100M

Enable the Apache modules ‘mod_rewrite’ and ‘mod_alias’ and restart your Server:

sudo a2enmod rewrite

sudo a2ensite

sudo systemctl restart apache2.service

Mysql Charset and Collation

- You should add the following to your configuration file (mariadb.cnf or my.cnf), i.e. the Charset and Collation you choose for your installation must match that in the ‘config/database.php’

[mysqld]

character-set-client-handshake = FALSE #without this, there is no effect of the init_connect

collation-server = utf8mb4_unicode_ci

init-connect = "SET NAMES utf8mb4 COLLATE utf8mb4_unicode_ci"

character-set-server = utf8mb4

log-bin-trust-function-creators = 1

sort_buffer_size = 4294967295 #this is needed for geometry (bug in mysql:8)

[mariadb] or [mysql]

max_allowed_packet=100M

innodb_log_file_size=300M #no use for mysql

- If using MariaDB and you still have problems of type #1267 Illegal mix of collations, then check here on how to fix that,

Configure supervisord

Configure Supervisor, which is required for jobs. Create a file name opendatabio-worker.conf in the Supervisor configuration folder /etc/supervisor/conf.d/opendatabio-worker.conf with the following content:

touch /etc/supervisor/conf.d/opendatabio-worker.conf

echo ";--------------

[program:opendatabio-worker]

process_name=%(program_name)s_%(process_num)02d

command=php /home/odbserver/opendatabio/artisan queue:work --sleep=3 --tries=1 --timeout=0 --memory=512

autostart=true

autorestart=true

user=odbserver

numprocs=8

redirect_stderr=true

stdout_logfile=/home/odbserver/opendatabio/storage/logs/supervisor.log

;--------------" > /etc/supervisor/conf.d/opendatabio-worker.conf

Folder permissions

Security

Folder and file permissions are important for securing a public server installation. If you don’t set them correctly, your site may be at risk.- Folders

storageandbootstrap/cachemust be writable by the Server user (usually www-data). Set0755permission to these directories. - Config

.envfile requires0640permission. - This link has different ways to set up permissions for files and folders of a Laravel application. Below the preferred method:

cd /home/odbserver

#give write permissions to odbserver user and the apache user

sudo chown -R odbserver:www-data opendatabio

sudo find ./opendatabio -type f -exec chmod 644 {} \;

sudo find ./opendatabio -type d -exec chmod 755 {} \;

#in these folders the server stores data and files.

#Make sure their permission is correct

cd /home/odbserver/opendatabio

sudo chgrp -R www-data storage bootstrap/cache

sudo chmod -R ug+rwx storage bootstrap/cache

#make sure the .env file has 640 permission

sudo chmod 640 ./.env

Install OpenDataBio

- Many Linux distributions (most notably Ubuntu and Debian) have different php.ini files for the command line interface and the Apache plugin. It is recommended to use the configuration file for Apache when running the install script, so it will be able to correctly point out missing extensions or configurations. To do so, find the correct path to the .ini file, and export it before using the

php installcommand.

For example,

export PHPRC=/etc/php/7.4/apache2/php.ini

-

The installation script will download the Composer dependency manager and all required PHP libraries listed in the

composer.jsonfile. However, if your server is behind a proxy, you should install and configure Composer independently. We have implemented PROXY configuration, but we are not using it anymore and have not tested properly (if you require adjustments, place an issue on GitLab). -

The script will prompt you configurations options, which are stored in the environment

.envfile in the application root folder.

You may, optionally, configure this file before running the installer:

- Create a

.envfile with the contents of the providedcp .env.example .env - Read the comments in this file and adjust accordingly.

- Run the installer:

cd /home/odbserver/opendatabio

php install

- Seed data - the script above will ask if you want to install seed data for Locations and Taxons - seed data is version specific. Check the seed data repository version notes.

Ready to go

If the install script finishes with success, you’re good to go! Point your browser to http://localhost/opendatabio. The database migrations include an administrator account, with loginadmin@example.org and password password1. Change the password after installing.

Installation issues

There are countless possible ways to install the application, but they may involve more steps and configurations.

- if you browser return 500|SERVER ERROR you should look to the last error in

storage/logs/laravel.log. If you have ERROR: No application encryption key has been specified run:

php artisan key:generate

php artisan config:cache

- If you receive the error “failed to open stream: Connection timed out” while running the installer, this indicates a misconfiguration of your IPv6 routing. The easiest fix is to disable IPv6 routing on the server.

- If you receive errors during the random seeding of the database, you may attempt to remove the database entirely and rebuild it. Of course, do not run this on a production installation.

php artisan migrate:fresh

- You may also replace the Locations and Taxons tables with seed data after a fresh migration using:

php seedodb

Post-install configs

- If your import/export jobs are not being processed, make sure Supervisor is running

systemctl start supervisord && systemctl enable supervisord, and check the log files atstorage/logs/supervisor.log. - You can change several configuration variables for the application. The most important of those are probably set

by the installer, and include database configuration and proxy settings, but many more exist in the

.envandconfig/app.phpfiles. In particular, you may want to change the language, timezone and e-mail settings. Runphp artisan config:cacheafter updating the config files. - In order to stop search engine crawlers from indexing your database, add the following to your “robots.txt” in your server root folder (in Debian, /var/www/html):

User-agent: *

Disallow: /

Storage & Backups

You may change storage configurations in config/filesystem.php, where you may define cloud based storage, which may be needed if have many users submitting media files, requiring lots of drive space.

- Data downloads are queue as jobs and a file is written in a temporary folder, and the file is deleted when the job is deleted by the user. This folder is defined as the

download diskin filesystem.php config file, which point tostorage/app/public/downloads. UserJobs web interface difficult navigation will force users to delete old jobs, but a cron cleaning job may be advisable to implement in your installation; - Media files are by default stored in the

media disk, which place files in folderstorage/app/public/media; - For regular configuration create both directories

storage/app/public/downloadsandstorage/app/public/mediawith writable permissions by the Server user, see below topic; - Remember to include media folder in a backup plan;

2.3 - Docker Installation

The easiest way to install and run OpenDataBio is using Docker and the docker configuration files provided, which contain all the needed configurations to run ODB. Uses nginx and mysql, and supervisor for queues

Docker files

laraverl-app/

----docker/*

----./env.docker

----docker-compose.yml

----Dockerfile

----Makefile

These are adapted from this link, where you find a production setting as well.

Installation

Download OpenDataBio

- Make sure you have Docker and Docker-compose installed in your system;

- Check if your user is in the docker group created during docker installation;

- Download or clone the OpenDataBio in your machine;

- Make sure your user is the owner of the opendatabio folder created and contents, else change ownership and group recursively to your user

- Enter the opendatabio directory

- Edit and adjust the environment file name

.env.docker(optional) - To install locally for development just adjust the following variables in the Dockerfile, which are needed to map the files owners to a docker user;

UIDthe numeric user your are logged in and which is the owner of all files and directories in the opendatabio directory.GDIthe numeric group the user belongs, usually same as UID.

- File

Makefilecontains shortcuts to the docker-compose commands used to build the services configured in thedocker-compose.ymland auxiliary files in thedockerfolder. - Build the docker containers using the shortcuts (read the Makefile to undersand the commands)

make build

- Start the implemented docker Services

make start

- See the containers and try log into the laravel container

docker ps

make ssh #to enter the container shell

make ssh-mysql #to enter the mysql container, where you may access the database shell using `mysql -uroot -p` or use the laravel user

- Install composer dependencies

make composer-install

- Migrate the database

make migrate

- You may also replace the Locations and Taxons tables with seed data:

make seed-odb

- If worked, then Opendatabio will be available in your browser http::/localhost:8080.

- Login with superuser

admin@example.organd passwordpassword1 - Additional configurations in these files are required for a production environment and deployment;

Data persistence

The docker images may be deleted without loosing any data. The mysql tables are stored in a volume. You may change to a local path bind.

docker volume list

Using

See the contents of Makefile

make stop

make start

make restart

docker ps

...

If you have issues and changed the docker files, you may need to rebuild:

#delete all images without loosing data

make stop

docker system prune -a #and accepts Yes

make build

make start

2.4 - Customize Installation

Simple changes that can be implemented in the layout of a OpenDataBio web site

Logo and BackGround Image

To replace the Navigation bar logo and the image of the landing page,

just put your image files replacing the files in /public/custom/ without changing their names.

Texts and Info

To change the welcome text of the landing page, change the values of the array keys in the following files:

/resources/lang/en/customs.php/resources/lang/pt/customs.php- Do not remove the entry keys. Set to null to suppress from appearing in the footer and landing page.

NavBar and Footer

- If you want to change the color of the top navigation bar and the footer,

just replace css Boostrap 5 class in the corresponding tags and files in folder

/resources/view/layout. - You may add additional html to the footer and navbar, change logo size, etc… as you wish.

3 - API services

Every OpenDataBio installation provide a API service, allowing users to GET data programmatically, and collaborators to POST new data into its database. The service is open access to public data, requires user authentication to POST data or GET data of restricted access.

The OpenDataBio API (Application Programming Interface -API) allows users to interact with an OpenDataBio database for exporting, importing and updating data without using the web-interface.

The OpenDataBio R package is a client for this API, allowing the interaction with the data repository directly from R and illustrating the API capabilities so that other clients can be easily built.

The OpenDataBio R package is a client for this API, allowing the interaction with the data repository directly from R and illustrating the API capabilities so that other clients can be easily built.

The OpenDataBio API allows querying of the database, data importation and data edition (update) through a REST inspired interface. All API requests and responses are formatted in JSON.

The API call

A simple call to the OpenDataBio API has four independent pieces:

- HTTP-verb - either

GETfor exports orPOSTfor imports. - base-URL - the URL used to access your OpenDataBio server + plus

/api/v0. For, example,http://opendatabio.inpa.gov.br/api/v0 - endpoint - represents the object or collection of objects that you want to access, for example, for querying taxonomic names, the endpoint is “taxons”

- request-parameters - represent filtering and processing that should be done with the objects, and are represented in the API call after a question mark. For example, to retrieve only valid taxonomic names (non synonyms) end the request with

?valid=1.

The API call above can be entered in a browser to GET public access data. For example, to get the list of valid taxons from an OpenDataBio installation the API request could be:

https://opendb.inpa.gov.br/api/v0/taxons?valid=1&limit=10

When using the OpenDataBio R package this call would be odb_get_taxons(list(valid=1)).

A response would be something like:

{

"meta":

{

"odb_version":"0.9.1-alpha1",

"api_version":"v0",

"server":"http://opendb.inpa.gov.br",

"full_url":"https://opendb.inpa.gov.br/api/v0/taxons?valid=1&limit1&offset=100"},

"data":

[

{

"id":62,

"parent_id":25,

"author_id":null,

"scientificName":"Laurales",

"taxonRank":"Ordem",

"scientificNameAuthorship":null,

"namePublishedIn":"Juss. ex Bercht. & J. Presl. In: Prir. Rostlin: 235. (1820).",

"parentName":"Magnoliidae",

"family":null,

"taxonRemarks":null,

"taxonomicStatus":"accepted",

"ScientificNameID":"http:\/\/tropicos.org\/Name\/43000015 | https:\/\/www.gbif.org\/species\/407",

"basisOfRecord":"Taxon"

}]}

API Authentication

- Not required for getting any data with public access in the ODB database, which by default includes locations, taxons, bibliographic references, persons and traits.

- Authentication Required to GET any data that is not of public access, and is required to POST and PUT data.

- Authentication is done using an

API token, that can be found under your user profile on the web interface. The token is assigned to a single database user, and should not be shared, exposed, e-mailed or stored in version controls. - To authenticate against the OpenDataBio API, use the token in the “Authorization” header of the API request. When using the R client, pass the token to the

odb_configfunctioncfg = odb_config(token="your-token-here"). - The token controls the data you can get and can edit

Users will only have access to the data for which the user has permission and to any data with public access in the database, which by default includes locations, taxons, bibliographic references, persons and traits. Measurements, individuals, and Vouchers access depends on permissions understood by the users token.

API versions

The OpenDataBio API follows its own version number. This means that the client can expect to use the same code and get the same answers regardless of which OpenDataBio version that the server is running. All changes done within the same API version (>= 1) should be backward compatible. Our API versioning is handled by the URL, so to ask for a specific API version, use the version number between the base URL and endpoint:

http://opendatabio.inpa.gov.br/opendatabio/api/v1/taxons

http://opendatabio.inpa.gov.br/opendatabio/api/v2/taxons

Version v0

The API version 0 (v0) is an unstable version. The first stable version will be the API version 1.3.1 - Quick reference

API call format

base-URL + ‘/api/v0/’ + endpoint + ‘?’ + request-parametersTaxons endpoint example

https://opendb.inpa.gov.br/api/v0/taxons?valid=1&limit=2&offset=10GET DATA (downloads)

Shared get-parameters

All endpoints share these GET parameters:

idreturn only the specified resource. May be number or a comma delimited list, such asapi/v0/locations?id=1,50,124limit: the number of items that should be returned (must be greater than 0). Example:api/v0/taxons?limit=10offset: the initial record to extract, to be used with limit when trying to download a large amount of data. Example:api/v0/taxons?offset=10000&limit=10000returns 10K records starting from the 10K position of the current query.fields: the field or fields that should be returned. Each endpoint has its own fields but there are two special words, simple (default) and all, which return different collection of fields.fields=allmay return sub-objects for each object.fields ='raw'will return the raw table, speeding up the search, although values may be more difficult to understand. Example:api/v0/taxons?fields=id,scientificName,validsave_job: for large data retrieval, if you addsave_job=1to the params list a job will be created for your search, and the data can then be obtained using the userjobs api.

Download large datasets?

Thelimit and offset parameters can be used to divide your search into parts. Alternatively, use the save_job=T option and then download the data with the get_file=T parameter from the userjobs API.

wildcards

Some parameters accept an asterisk as wildcard, soapi/v0/taxons?name=Euterpe will return taxons with name exactly as “Euterpe”, while api/v0/taxons?name=Eut* will return names starting with “Eut”.

Endpoint parameters

| Endpoint | Description | Possible parameters |

|---|---|---|

| / | Tests your access | none |

| bibreferences | Lists of bibliographic references | id, bibkey |

| biocollections | List of Biocollections and other vouchers Repositories | id |

| datasets | Lists registered datasets or downloand the files of dataset versions | id, list_versions, file_name |

| individuals | Lists registered individuals | id, location, location_root,taxon, taxon_root, tag,project, dataset |

| individual-locations | Lists occurrences for individuals | individual_id, location, location_root,taxon, taxon_root, dataset |

| languages | Lists registered languages | |

| measurements | Lists Measurements | id, taxon,dataset,trait,individual,voucher,location |

| locations | Lists locations | root, id, parent_id,adm_level, name, limit, querytype, lat, long,project,dataset |

| persons | Lists registered people | id, search, name, abbrev, email |

| projects | Lists registered projects | id only |

| taxons | Lists taxonomic names | root, id, name,level, valid, external, project,dataset |

| traits | Lists variables (traits) list | id, name |

| vouchers | Lists registered voucher specimens | id, number, individual, location, collector, location_root,taxon, taxon_root, project, dataset |

| userjobs | Lists user Jobs | id, status,get_file |

POST DATA (imports)

Web-interface note

- Importing data from files through the web-interface require specifying the POST verb parameters of the ODB API

- Batch imports of Bibliographic References and MediaFiles are possible only through the web interface.

| Endpoint | Description | POST Fields |

|---|---|---|

| biocollections | Import BioCollections | name, acronym |

| individuals | Import individuals | collector, tag, dataset, date, (location or latitude + longitude)**, altitude, location_notes, location_date_time, x, y, distance , angle , notes , taxon , identifier , identification_date , modifier, identification_notes , identification_based_on_biocollection, identification_based_on_biocollection_id , identification_individual |

| individual-locations | Import IndividualLocations | individual, (location or latitude + longitude), altitude, location_notes, location_date_time, x, y, distance, angle |

| locations | Import locations | name, adm_level, (geom or lat+long)** , parent, altitude, datum, x, y , startx, starty, notes, ismarine |

| measurements | Import Measurements to Datasets | dataset, date, object_type, object_id, person, trait_id, value**, link_id, bibreference, notes, duplicated,location, parent_measurement |

| persons | Imports a list of people | full_name**, abbreviation, email, institution, biocollection |

| traits | Import traits | export_name, type, objects, name, description**, units, range_min, range_max, categories, wavenumber_min and wavenumber_max, value_length, link_type, bibreference, tags |

| taxons | Imports taxonomic names | name**, level, parent, bibreference, author, author_id or person, valid, mobot, ipni, mycobank, zoobank, gbif |

| vouchers | Imports voucher specimens | individual, biocollection, biocollection_type, biocollection_number, number, collector, date, dataset, notes |

PUT DATA (updates)

Attention

Only the endpoints listed below can be updated using the API and only the listed PUT fields can be updated on each endpoint. Field values are as explained for the POST API endpoints, except that in all cases you must also provide theid of the record to be updated.

| Endpoint | Description | PUT Fields |

|---|---|---|

| individuals | Update Individuals | (id or individual_id),collector, tag, dataset, date, notes , taxon , identifier , identification_date , modifier, identification_notes , identification_based_on_biocollection, identification_based_on_biocollection_id , identification_individual |

| individual-locations | Update Individual Locations | (id or individual_location_id), individual, (location or latitude + longitude), altitude, location_notes, location_date_time, x, y, distance, angle |

| locations | Update Locations | (id or location_id), name, adm_level, (geom or lat+long) , parent, altitude, datum, x, y , startx, starty, notes, ismarine |

| measurements | Update Measurements | (id or measurement_id), dataset, date, object_type, object_type, person, trait_id, value, link_id, bibreference, notes, duplicated,location, parent_measurement |

| persons | Update Persons | (id or person_id),full_name, abbreviation, email, institution, biocollection |

| vouchers | Update Vouchers | (id or voucher_id),individual, biocollection, biocollection_type, biocollection_number, number, collector, date, dataset, notes |

Nomenclature types

| Nomenclature types numeric codes | |

|---|---|

| NotType : 0 | Isosyntype : 8 |

| Type : 1 | Neotype : 9 |

| Holotype : 2 | Epitype : 10 |

| Isotype : 3 | Isoepitype : 11 |

| Paratype : 4 | Cultivartype : 12 |

| Lectotype : 5 | Clonotype : 13 |

| Isolectotype : 6 | Topotype : 14 |

| Syntype : 7 | Phototype : 15 |

Taxon Level (Rank)

| Level | Level | Level | Level |

|---|---|---|---|

-100 clade |

60 cl., class |

120 fam., family |

210 section, sp., spec., species |

0 kingdom |

70 subcl., subclass |

130 subfam., subfamily |

220 subsp., subspecies |

10 subkingd. |

80 superord., superorder |

150 tr., tribe |

240 var., variety |

30 div., phyl., phylum, division |

90 ord., order |

180 gen., genus |

270 f., fo., form |

40 subdiv. |

100 subord. |

190 subg., subgenus, sect. |

3.2 - GET data

- The OpenDataBio R package is a client for this API.

- No authentication required to access data with a public access policy

- Authentication token required only to get data with a non-public access policy

Shared GET parameters

All endpoints share these GET parameters:

idreturn only the specified resource. May be number or a comma delimited list, such asapi/v0/locations?id=1,50,124limit: the number of items that should be returned (must be greater than 0). Example:api/v0/taxons?limit=10offset: the initial record to extract, to be used with limit when trying to download a large amount of data. Example:api/v0/taxons?offset=10000&limit=10000returns 10K records starting from the 10K position of the current query.fields: the field or fields that should be returned. Each endpoint has its own fields but there are two special words, simple (default) and all, which return different collection of fields.fields=allmay return sub-objects for each object.fields ='raw'will return the raw table, speeding up the search, although values may be more difficult to understand. Example:api/v0/taxons?fields=id,scientificName,validsave_job: for large data retrieval, if you addsave_job=1to the params list a job will be created for your search, and the data can then be obtained using the userjobs api.

Download large datasets?

Thelimit and offset parameters can be used to divide your search into parts. Alternatively, use the save_job=T option and then download the data with the get_file=T parameter from the userjobs API.

wildcards

Some parameters accept an asterisk as wildcard, soapi/v0/taxons?name=Euterpe will return taxons with name exactly as “Euterpe”, while api/v0/taxons?name=Eut* will return names starting with “Eut”.

BibReferences Endpoint

The

bibreferencesendpoint interact with the bibreference table. Their basic usage is getting the registered Bibliographic References.

GET request-parameters

id=listreturn only references having the id or ids provided (exid=1,2,3,10)bibkey=listreturn only references having the bibkey or bibkeys (exbibkey=ducke1953,mayr1992)taxon=list of idsreturn only references linked to the taxon informed.limitandoffsetlimit query. see Common parameters.

Response fields

id- the id of the BibReference in the bibreferences table (a local database id)bibkey- the bibkey used to search and use of the reference in the web systemyear- the publication yearauthor- the publication authorstitle- the publication titledoi- the publication DOI if presenturl- an external url for the publication if presentbibtex- the reference citation record in BibTex format

Datasets Endpoint

The

datasetsendpoint interacts with the Datasets table and with static versions of Datasets. Useful for getting dataset_ids to import measurements, individuals, vouchers and media. You can also download files of static versions of the data, if any were generated by dataset administrators. This allows access to data from datasets in a faster way. Access to the data will depend on whether the version has a public license, or whether the user is a contributor, administrator or viewer of the dataset.

GET request-parameters

id=listreturn only datasets having the id or ids provided (exid=1,2,3,10)list_versions = booleanreturns the list of files and versions for one or moreiddatasets (egid='1,2,3,4',list_versions=1)file_name = stringreturns the data of a single file indicated by this parameter, as indicated in the list of versions returned (egid='1',file_name='2_Organisms.csv')

Response fields

With list_versions=1

dataset_id- the id of the dataset in the dataset table (a local database id)dataset- the name of the datasetversion- the name of the static version of the datasetlicense- the CreativeCommons license of the data versionaccess- indicating if it is OpenAccess or if the user has accessget_params- how to inform theparamsargument in the odb_get_datasets() function of the R package to get the data in files

With file_name

- Returns data from the given file

Just id or nothing

id- the id of the Dataset in the datasets table (a local database id)name- the name of the datasetprivacyLevel- the access level for the datasetcontactEmail- the dataset administrator emaildescription- a description of the datasetpolicy- the data policy if specifiedmeasurements_count- the number of measurements in the datasettaggedWidth- the list of tags applied to the dataset

Biocollections Endpoint

The

biocollectionsendpoint interact with the biocollections table. Their basic usage is getting the list of the Biological Collections registered in the database. Using for gettingbiocollection_idor validating your codes for importing data with the Vouchers or Individuals endpoints.

GET request-parameters

id=listreturn only ‘biocollections’ having the id or ids provided (exid=1,2,3,10)acronymreturn only ‘biocollections’ having the acronym or acronym provided (exacronym=INPA,SP,NY)

Response fields

id- the id of the repository or museum in the biocollections table (a local database id)name- the name of the repository or museumacronym- the repository or museum acronymirn- only for Herbaria, the number of the herbarium in the Index Herbariorum

Individuals Endpoint

The

individualsendpoints interact with the Individual table. Their basic usage is getting a list of individuals.

GET request-parameters

id=number or list- return individuals that have id or ids ex:id=2345,345location=mixed- return by location id or name or ids or names ex:location=24,25,26location=Parcela 25haof the locations where the individualslocation_root- same as location but return also from the descendants of the locations informedtaxon=mixed- the id or ids, or canonicalName taxon names (fullnames) ex:taxon=Aniba,Ocotea guianensis,Licaria cannela tenuicarpaortaxon=456,789,3,4taxon_root- same as taxon but return also all the individuals identified as any of the descendants of the taxons informedproject=mixed- the id or ids or names of the project, ex:project=3orproject=OpenDataBiotag=list- one or more individual tag number/code, ex:tag=individuala1,2345,2345Adataset=mixed- the id or ids or names of the datasets, return individuals having measurements in the datasets informedlimitandoffsetare SQL statements to limit the amount of data when trying to download a large number of individuals, as the request may fail due to memory constraints. See Common parameters.

Note

Notice that all search fields (taxon, location and dataset) may be specified as names (eg, “taxon=Euterpe edulis”) or as database ids. If a list is specified for one of these fields, all items of the list must be of the same type, i.e. you cannot search for ’taxon=Euterpe,24’. Also, location and taxon have priority over location_root and taxon_root if both informed.Response fields

id- the ODB id of the Individual in the individuals table (a local database id)basisOfRecordDWC - will be always ‘organism’ [dwc organism](DWCorganismIDDWC - a local unique combination of record info, composed of recordNumber,recordedByMain,locationNamerecordedByDWC - pipe “|” separated list of registered Persons abbreviationsrecordedByMain- the first person in recordedBy, the main collectorsrecordNumberDWC - an identifier for the individual, may be the code in a tree aluminum tag, a bird band code, a collector numberrecordedDateDWC - the record datescientificNameDWC - the current taxonomic identification of the individual (no authors) or “unidentified”scientificNameAuthorshipDWC - the taxon authorship. For taxonomicStatus unpublished: will be a ODB registered Person namefamilyDWCgenusDWCidentificationQualifierDWC - identification name modifiers cf. aff. s.l., etc.identifiedByDWC - the Person identifying the scientificName of this recorddateIdentifiedDWC - when the identification was made (may be incomplete, with 00 in the month and or day position)identificationRemarksDWC - any notes associated with the identificationlocationName- the location name (if plot the plot name, if point the point name, …)locationParentName- the immediate parent locationName, to facilitate use when location is subplothigherGeographyDWC - the parent LocationName ‘|’ separated (e.g. Brasil | Amazonas | Rio Preto da Eva | Fazenda Esteio | Reserva km37 | Manaus ForestGeo-PDBFF Plot | Quadrat 100x100 );decimalLatitudeDWC - depends on the location adm_level and the individual X and Y, or Angle and Distance attributes, which are used to calculate these global coordinates for the record; if individual has multiple locations (a monitored bird), the last location is obtained with this get APIdecimalLongitudeDWC - same as for decimalLatitudex- the individual X position in a Plot locationy- the individual Y position in a Plot locationgx- the individual global X position in a Parent Plot location, when location is subplot (ForestGeo standards)gy- the individual global Y position in a Parent Plot location, when location is subplot (ForestGeo standards)angle- the individual azimuth direction in relation to a POINT reference, either when adm_level is POINT or when X and Y are also provided for a Plot location this is calculated from X and Y positionsdistance- the individual distance direction in relation to a POINT reference, either when adm_level is POINT or when X and Y are also provided for a Plot location this is calculated from X and Y positionsorganismRemarksDWC - any note associated with the Individual recordassociatedMediaDWC - urls to ODB media files associated with the recorddatasetName- the name of the ODB Dataset to which the record belongs to DWCaccessRights- the ODB Dataset access privacy setting - DWCbibliographicCitation- the ODB Dataset citation - DWClicense- the ODB Dataset license - DWC

Individual-locations Endpoint

The

individual-locationsendpoint interact with the individual_location table. Their basic usage is getting location data for individuals, i.e. occurrence data for organisms. Designed for occurrences of organisms that move and have multiple locations, else the same info is retrieved with the Individuals endpoint.

GET request-parameters

individual_id=number or list- return locations for individuals that have id or ids ex:id=2345,345location=mixed- return by location id or name or ids or names ex:location=24,25,26location=Parcela 25haof the locations where the individualslocation_root- same as location but return also from the descendants of the locations informedtaxon=mixed- the id or ids, or canonicalName taxon names (fullnames) ex:taxon=Aniba,Ocotea guianensis,Licaria cannela tenuicarpaortaxon=456,789,3,4taxon_root- same as taxon but return also all the the locations for individuals identified as any of the descendants of the taxons informeddataset=mixed- the id or ids or names of the datasets, return individuals belonging to the datasets informedlimitandoffsetare SQL statements to limit the amount of data when trying to download a large number of individuals, as the request may fail due to memory constraints. See Common parameters.

Note

Notice that all search fields (taxon, location and dataset) may be specified as names (eg, “taxon=Euterpe edulis”) or as database ids. If a list is specified for one of these fields, all items of the list must be of the same type, i.e. you cannot search for ’taxon=Euterpe,24’. Also, location and taxon have priority over location_root and taxon_root if both informed.Response fields

individual_id- the ODB id of the Individual in the individuals table (a local database id)location_id- the ODB id of the Location in the locations table (a local database id)basisOfRecord- will be always ‘occurrence’ - DWC and [dwc occurrence](DWC;occurrenceID- the unique identifier for this record, the individual+location+date_time - DWCorganismID- the unique identifier for the Individual DWCrecordedDate- the occurrence date+time observation - DWClocationName- the location name (if plot the plot name, if point the point name, …)higherGeography- the parent LocationName ‘|’ separated (e.g. Brasil | Amazonas | Rio Preto da Eva | Fazenda Esteio | Reserva km37 | Manaus ForestGeo-PDBFF Plot | Quadrat 100x100 ) - DWCdecimalLatitude- depends on the location adm_level and the individual X and Y, or Angle and Distance attributes, which are used to calculate these global coordinates for the record - DWCdecimalLongitude- same as for decimalLatitude - DWCgeoreferenceRemarks- will contain the explanation of the type of decimalLatitude - DWCx- the individual X position in a Plot locationy- the individual Y position in a Plot locationangle- the individual azimuth direction in relation to a POINT reference, either when adm_level is POINT or when X and Y are also provided for a Plot location this is calculated from X and Y positionsdistance- the individual distance direction in relation to a POINT reference, either when adm_level is POINT or when X and Y are also provided for a Plot location this is calculated from X and Y positionsminimumElevation- the altitude for this occurrence record if any - DWCoccurrenceRemarks- any note associated with this record - DWCscientificName- the current taxonomic identification of the individual (no authors) or “unidentified” - DWCfamily- the current taxonomic family name, if apply - DWCdatasetName- the name of the ODB Dataset to which the record belongs to - DWCaccessRights- the ODB Dataset access privacy setting - DWCbibliographicCitation- the ODB Dataset citation - DWClicense- the ODB Dataset license DWC

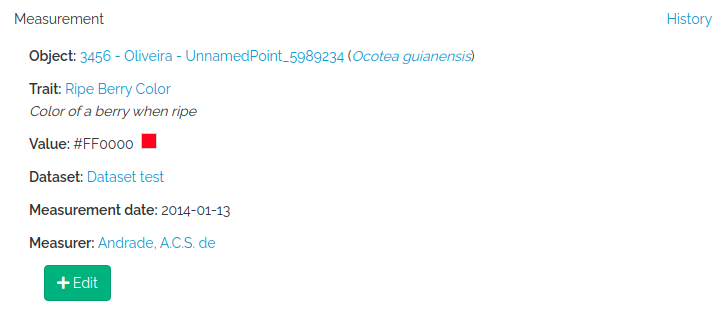

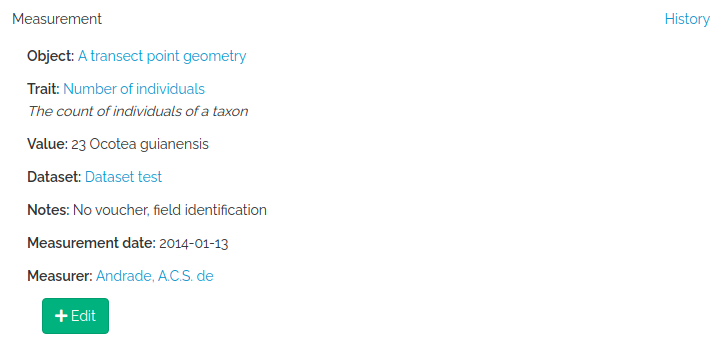

Measurements Endpoint

The

measurementsendpoint interact with the measurements table. Their basic usage is getting Data linked to Individuals, Taxons, Locations or Vouchers, regardless of datasets, so it is useful when you want particular measurements from different datasets that you have access for. If you want a full dataset, you may just use the web interface, as it prepares a complete set of the dataset measurements and their associated data tables for you.

GET request-parameters

id=list of idsreturn only the measurement or measurements having the id or ids provided (exid=1,2,3,10)taxon=list of ids or namesreturn only the measurements related to the Taxons, both direct taxon measurements and indirect taxon measurements from their individuals and vouchers (extaxon=Aniba,Licaria). Does not consider descendants taxons for this usetaxon_rootinstead. In the example only measurements directly linked to the genus and genus level identified vouchers and individuals will be retrieved.taxon_root=list of ids or namessimilar totaxon, but get also measurements for descendants taxons of the informed query (extaxon=Lauraceaewill get measurements linked to Lauraceae and any taxon that belongs to it;dataset=list of idsreturn only the measurements belonging to the datasets informed (exdataset=1,2) - allows to get all data from a dataset.trait=list of ids or export_namesreturn only the measurements for the traits informed (extrait=DBH,DBHpomordataset=2?trait=DBH) - allows to get data for a particular traitindividual=list of individual idsreturn only the measurements for the individual ids informed (exindividual=1000,1200)voucher=list of voucher idsreturn only the measurements for the voucher ids informed (exvoucher=345,321)location=list of location idsreturn only measurements for the locations ids informed (exlocation=4,321)- does not retrieve measurements for individuals and vouchers in those locations, only measured locations, like plot soil surveys data.limitandoffsetare SQL statements to limit the amount of data when trying to download a large number of measurements, as the request may fail due to memory constraints. See Common parameters.

Response fields

id- the Measurement ODB id in the measurements table (local database id)basisOfRecordDWC - will be always ‘MeasurementsOrFact’ [dwc measurementorfact](DWCmeasured_type- the measured object, one of ‘Individual’, ‘Location’, ‘Taxon’ or ‘Voucher’measured_id- the id of the measured object in the respective object table (individuals.id, locations.id, taxons.id, vouchers.id)measurementIDDWC - a unique identifier for the Measurement record - combine measured resourceRelationshipID, measurementType and datemeasurementTypeDWC - the export_name for the ODBTrait measuredmeasurementValueDWC - the value for the measurement - will depend on kind of the measurementType (i.e. ODBTrait)measurementUnitDWC - the unit of measurement for quantitative traitsmeasurementDeterminedDateDWC - the Measurement measured datemeasurementDeterminedByDWC - Person responsible for the measurementmeasurementRemarksDWC - text note associated with this Measurement recordresourceRelationshipDWC - the measured object (resource) - one of ’location’,’taxon’,‘organism’,‘preservedSpecimen’resourceRelationshipIDDWC - the id of the resourceRelationshiprelationshipOfResourceDWC - will always be ‘measurement of’scientificNameDWC - the current taxonomic identification (no authors) or ‘unidentified’ if the resourceRelationship object is not ’location’familyDWC - taxonomic family name if appliesdatasetName- the name of the ODB Dataset to which the record belongs to - DWCaccessRights- the ODB Dataset access privacy setting - DWCbibliographicCitation- the ODB Dataset citation - DWClicense- the ODB Dataset license - DWCmeasurementLocationId- the ODB id of the location associated with the measurementmeasurementParentId- the ODB id of another related measurement (the parent measurement to which the current depends upon)decimalLatitudeDWC - a latitude em graus decimais da medição ou do objeto medido.decimalLongitudeDWC - a longitude em graus decimais da medição ou do objeto medido.

Media Endpoint

The

mediaendpoint interact with the media table. Their basic usage is getting the metadata associated with MediaFiles and the files URL.

GET request-parameters

individual=number or list- return media associated with the individuals having id or ids ex:id=2345,345voucher=number or list- return media associated with the vouchers having id or ids ex:id=2345,345location=mixed- return media associated with the locations having id or name or ids or names ex:location=24,25,26location=Parcela 25halocation_root- same as location but return also media associated with the descendants of the locations informedtaxon=mixed- the id or ids, or canonicalName taxon names (fullnames) ex:taxon=Aniba,Ocotea guianensis,Licaria cannela tenuicarpaortaxon=456,789,3,4taxon_root- same as taxon but return also all the locations for media related to any of the descendants of the taxons informeddataset=mixed- the id or ids or names of the datasets, return belonging to the datasets informedlimitandoffsetare SQL statements to limit the amount of data when trying to download a large number of individuals, as the request may fail due to memory constraints. See Common parameters.

Note

Notice that all search fields (taxon, location and dataset) may be specified as names (eg, “taxon=Euterpe edulis”) or as database ids. If a list is specified for one of these fields, all items of the list must be of the same type, i.e. you cannot search for ’taxon=Euterpe,24’. Also, location and taxon have priority over location_root and taxon_root if both informed.Response fields

id- the Measurement ODB id in the measurements table (local database id)basisOfRecordDWC - will be always ‘MachineObservation’ DWCmodel_type- the related object, one of ‘Individual’, ‘Location’, ‘Taxon’ or ‘Voucher’model_id- the id of the related object in the respective object table (individuals.id, locations.id, taxons.id, vouchers.id)resourceRelationshipDWC - the related object (resource) - one of ’location’,’taxon’,‘organism’,‘preservedSpecimen’resourceRelationshipIDDWC - the id of the resourceRelationshiprelationshipOfResourceDWC - will be the dwcTyperecordedByDWC - pipe “|” separated list of registered Persons abbreviationsrecordedDateDWC - the media file datescientificNameDWC - the current taxonomic identification of the individual (no authors) or “unidentified”familyDWCdwcTypeDWC - one of StillImage, MovingImage, SounddatasetName- the name of the ODB Dataset to which the record belongs to DWCaccessRights- the ODB Dataset access privacy setting - DWCbibliographicCitation- the ODB Dataset citation - DWClicense- the ODB Dataset license - DWCfile_name- the file namefile_url- the url to the file

Languages EndPoint

The

languagesendpoint interact with the Language table. Their basic usage is getting a list of registered Languages to import User Translations like Trait and TraitCategories names and descriptions.

Response fields

id- the id of the language in the languages table (a local database id)code- the language string code;name- the language name;

Locations Endpoint

The

locationsendpoints interact with the locations table. Their basic usage is getting a list of registered countries, cities, plots, etc, or importing new locations.

GET request-parameters

id=listreturn only locations having the id or ids provided (exid=1,2,3,10)adm_level=numberreturn only locations for the specified level or type:- 2 for country; 3 for first division within country (province, state); 4 for second division (e.g. municipality)… up to adm_level 10 as administrative areas (Geometry: polygon, MultiPolygon);

- 97 is the code for Environmental Layers (Geometry: polygon, multipolygon);

- 98 is the code for Indigenous Areas (Geometry: polygon, multipolygon);

- 99 is the code for Conservation Units (Geometry: polygon, multipolygon);

- 100 is the code for plots and subplots (Geometry: polygon or point);

- 101 for transects (Geometry: point or linestring)

- 999 for any ‘POINT’ locations like GPS waypoints (Geometry: point);

name=stringreturn only locations whose name matches the search string. You may use asterisk as a wildcard. Example:name=Manausorname=*Ducke*to find name that has the word Ducke;parent_id=listreturn the locations for which the direct parent is in the list (ex: parent_id=2,3)root=numbernumber is the locationidto search, returns the location for the specified id along with all of its descendants locations; example: find the id for Brazil and use its id as root to get all the locations belonging to Brazil;querytypeone of “exact”, “parent” or “closest” and must be provided withlatandlong:- when

querytype=exactwill find a point location that has the exact match of thelatandlong; - when

querytype=parentwill find the most inclusive parent location within which the coordinates given bylatandlongfall; - when

querytype=closestwill find the closest location to the coordinates given bylatandlong; It will only search for closest locations havingadm_level > 99, see above. latandlongmust be valid coordinates in decimal degrees (negative for South and West);

- when

fields=listspecify which fields you want to get with your query (see below for field names), or use options ‘all’ or ‘simple’, to get full set and the most important columns, respectivelyproject=mixed- id or name of project (may be a list) return the locations belonging to one or more Projectsdataset=mixed- id or name of a dataset (may be a list) return the locations belonging to one or more Datasets

Notice that id, search, parent and root should probably not be combined in the same query.

Response fields

id- the ODB id of the Location in the locations table (a local database id)basisOfRecordDWC - will always contain ’location’ [dwc location](DWClocationName- the location name (if country the country name, if state the state name, etc…)adm_level- the numeric value for the ODB administrative level (2 for countries, etc)levelName- the name of the ODB administrative levelparent_id- the ODB id of the parent locationparentName- the immediate parent locationNamehigherGeographyDWC - the parent LocationName ‘|’ separated (e.g. Brasil | São Paulo | Cananéia);footprintWKTDWC - the WKT representation of the location; if adm_level==100 (plots) or adm_level==101 (transects) and they have been informed as a POINT location, the respective polygon or linestring geometries, the footprintWKT will be that generated using the location’s x and y dimensions.xandy- (meters) when location is a plot (100 == adm_level) its X and Y dimensions, if a transect (101 == adm_level), x may be the length and y may be a buffer dimension around the linestring.startxandstarty- (meters) when location is a subplot (100 == adm_level with parent also adm_level==100), the X and Y start position in relation to the 0,0 coordinate of the parent plot location, which is either a Point, or the first coordinate of a Polygon geometry type;distance- only when querytype==closest, this value will be present, and indicates the distance, in meters, the locations is from your queried coordinates;locationRemarksDWC - any notes associated with this Location recorddecimalLatitudeDWC - depends on the adm_level: if adm_level<=99, the latitude of the centroid; if adm_level == 999 (point), its latitude; if adm_level==100 (plot) or 101 (transect), but is a POINT geometry, the POINT latitude, else if POLYGON geometry, then the first point of the POLYGON or the LINESTRING geometry.decimalLongitudeDWC - same as for decimalLatitudegeoreferenceRemarksDWC - will contain the explanation about decimalLatitudegeodeticDatum-DWC the geodeticDatum informed for the geometry (ODB does not treat map projections, assumes data is always is WSG84)

Persons Endpoint

The

personsendpoint interact*** with the Person table. The basic usage is getting a list of registered people (individuals and vouchers collectors, taxonomic specialists or database users).

GET request-parameters

id=listreturn only persons having the id or ids provided (exid=1,2,3,10)name=string- return people whose name matches the specified string. You may use asterisk as a wildcard. Ex:name=*ducke*abbrev = string, return people whose abbreviation matches the specified string. You may use asterisk as a wildcard.email=string, return people whose e-mail matches the specified string. You may use asterisk as a wildcard.search=string, return people whose name, abbreviation or e-mail matches the specified string. You may use asterisk as a wildcard.limitandoffsetare SQL statements to limit the amount of data when trying to download a large number of measurements, as the request may fail due to memory constraints. See Common parameters.

Response fields

id- the id of the person in the persons table (a local database id)full_name- the person name;abbreviation- the person name (this are UNIQUE values in a OpenDataBio database)email- the email, if registered or person is userinstitution- the persons institution, if registerednotes- any registered notes;biocollection- the name of the Biological Collection (Biocollections, etc) that the person is associated with; not included in simple)

Projects EndPoint

The

projectsendpoint interact with the projects table. The basic usage is getting the registered Projects.

GET request-parameters

id=listreturn only projects having the id or ids provided (exid=1,2,3,10)

Response fields

id- the id of the Project in the projects table (a local database id)fullname- project nameprivacyLevel- the access level for individuals and vouchers in ProjectcontactEmail- the project administrator emailindividuals_count- the number of individuals in the projectvouchers_count- the number of vouchers in the project

Taxons Endpoint

The

taxonsendpoint interact with the taxons table. The basic usage is getting a list of registered taxonomic names.

GET request-parameters

id=listreturn only taxons having the id or ids provided (exid=1,2,3,10)name=searchreturns only taxons with fullname (no authors) matching the search string. You may use asterisk as a wildcard.root=numberreturns the taxon for the specified id along with all of its descendantslevel=numberreturn only taxons for the specified taxon level.valid=1return only valid namesexternal=1return the Tropicos, IPNI, MycoBank, ZOOBANK or GBIF reference numbers. You need to specifyexternalrefsin the field list to return them!project=mixed- id or name of project (may be a list) return the taxons belonging to one or more Projectsdataset=mixed- id or name of a dataset (may be a list) return the taxons belonging to one or more Datasetslimitandoffsetare SQL statements to limit the amount. See Common parameters.

Notice that id, name and root should not be combined.

Response fields

id- this ODB id for this Taxon record in the taxons tablesenior_id- if invalid this ODB identifier of the valid synonym for this taxon (acceptedNameUsage) - only when taxonomicStatus == ‘invalid’parent_id- the id of the parent taxonauthor_id- the id of the person that defined the taxon for unpublished names (having an author_id means the taxon is unpublished)scientificNameDWC - the full taxonomic name without authors (i.e. including genus name and epithet for species name)scientificNameIDDWC - nomenclatural databases ids, if any external reference is stored for this Taxon recordtaxonRankDWC - the string value of the taxon ranklevel- the ODB numeric value of the taxon rankscientificNameAuthorshipDWC - the taxon authorship. For taxonomicStatus unpublished: will be a ODB registered Person namenamePublishedIn- unified bibliographic reference (i.e. either the short format or an extract of the bibtext reference assigned). This will be mostly retrieved from nomenclatural databases; Taxon linked references can be extracted with the BibReference endpoint.taxonomicStatusDWC - one of ‘accepted’, ‘invalid’ or ‘unpublished’; if invalid, fields senior_id and acceptedNameUsage* will be filledparentNameUsageDWC - the name of the parent taxon, if species, the genus, if genus, family, and so onfamilyDWC - the family name if taxonRank family or belowhigherClassificationDWC - the full taxonomic hierarchical classification, pipe separated (will include only Taxons registered in this database)acceptedNameUsageDWC - if taxonomicStatus invalid the valid scientificName for this TaxonacceptedNameUsageIDDWC - if taxonomicStatus invalid the scientificNameID ids of the valid TaxontaxonRemarksDWC - any note the taxon record may havebasisOfRecordDWC - will always be ’taxon’externalrefs- the Tropicos, IPNI, MycoBank, ZOOBANK or GBIF reference numbers

Traits Endpoint

The

traitsendpoint interact with the Trait table. The basic usage is getting a list of variables and variables categories for importing Measurements.

GET request-parameters

id=listreturn only traits having the id or ids provided (exid=1,2,3,10);name=stringreturn only traits having theexport_nameas indicated (exname=DBH)categories- if true return the categories for categorical traitslanguage=mixedreturn name and descriptions of both trait and categories in the specified language. Values may be ’language_id’, ’language_code’ or ’language_name’;bibreference=boolean- if true, include the BibReference associated with the trait in the results;limitandoffsetare SQL statements to limit the amount. See Common parameters.

Response fields

id- the id of the Trait in the odbtraits table (a local database id)type- the numeric code defining the Trait typetypename- the name of the Trait typeexport_name- the export name valuemeasurementTypeDWC - same as export_name for DWC compatibilitymeasurementMethodDWC - combine name, description and categories if apply (included in the Measurement GET API, for DWC compatibility)measurementUnit- the unit of measurement for Quantitative traitsmeasurementTypeBibkeys- the bibkeys of the bibliographic references associated with the trait definition, separated by pipe ‘|’taggedWith- the name of the tags or keywords associated with the trait definition, separated by pipe ‘|’range_min- the minimum allowed value for Quantitative traitsrange_max- the maximum allowed value for Quantitative traitslink_type- if Link type trait, the class of the object the trait links to (currently only Taxon)name- the trait name in the language requested or in the default languagedescription- the trait description in the language requested or in the default languagevalue_length- the length of values allowed for Spectral trait typesobjects- the types of object the trait may be used for, separated by pipe ‘|’categories- each category is given for Categorical and Ordinal traits, with the following fields (the categoryid,name,descriptionandrank). Ranks are meaningfull only for ORDINAL traits, but reported for all categorical traits.

Vouchers Endpoint

The

vouchersendpoints interact with the Voucher table. Their basic usage is getting data from Voucher specimens

GET parameters

id=listreturn only vouchers having the id or ids provided (exid=1,2,3,10)number=stringreturns only vouchers for the informed collector number (but is a string and may contain non-numeric codes)collector=mixedone of id or ids or abbreviations, returns only vouchers for the informed main collectordataset=list- one of id or ids list, name or names list, return all vouchers directly or indirectly related to the datasets informed.project=mixedone of ids or names, returns only the vouchers for datasets belonging to the Project informed.location=mixedone of ids or names; (1) ifindividual_tagis also requested returns only vouchers for those individuals (or use “individual=*” to get all vouchers for any individual collected at the location); (2) ifindividualandindividual_tagare not informed, then returns vouchers linked to locations and to the individuals at the locations.location_root=mixed- same as location, but include also the vouchers for the descendants of the locations informed. e.g. “location_root=Manaus” to get any voucher collected within the Manaus administrative area;individual=mixedeither a individual_id or a list of ids, or*- returns only vouchers for the informed individuals; when “individual=*” then location must be informed, see above;taxon=mixedone of ids or names, returns only vouchers for the informed taxons. This could be either vouchers referred as parent of the requested taxon or vouchers of individuals of the requested taxons.taxon_root=mixed- same as taxon, but will include in the return also the vouchers for the descendants of the taxons informed. e.g. “taxon_root=Lauraceae” to get any Lauraceae voucher;

Notice that some search fields (taxon, location, project and collector) may be specified as names - abbreviation, fullnames and emails in the case of collector - (eg, “taxon=Euterpe edulis”) or as database ids. If a list is specified for one of these fields, all items of the list must be of the same type.

Response fields

id- the Voucher ODB id in the vouchers table (local database id)basisOfRecordDWC - will be always ‘preservedSpecimen’ [dwc location](DWCoccurrenceIDDWC - a unique identifier for the Voucher record - combine organismID with biocollection infoorganismIDDWC - a unique identifier for the Individual the Voucher belongs toindividual_id- the ODB id for the Individual the Voucher belongs tocollectionCodeDWC - the Biocollection acronym where the Voucher is depositedcatalogNumberDWC - the Biocollection number or code for the VouchertypeStatusDWC - if the Voucher represent a nomenclatural typerecordedByDWC - collectors pipe “|” separated list of registered Persons abbreviations that collected the vouchersrecordedByMain- the first person in recordedBy, the main collectorrecordNumberDWC - an identifier for the Voucher, generaly the Collector Number valuerecordedDateDWC - the record date, collection datescientificNameDWC - the current taxonomic identification of the individual (no authors) or “unidentified”scientificNameAuthorshipDWC - the taxon authorship. For taxonomicStatus unpublished: will be a ODB registered Person namefamilyDWCgenusDWCidentificationQualifierDWC - identification name modifiers cf. aff. s.l., etc.identifiedByDWC - the Person identifying the scientificName of this recorddateIdentifiedDWC - when the identification was made (may be incomplete, with 00 in the month and or day position)identificationRemarksDWC - any notes associated with the identificationlocationName- the location name for the organismID the voucher belongs to (if plot the plot name, if point the point name, …)higherGeographyDWC - the parent LocationName ‘|’ separated (e.g. Brasil | Amazonas | Rio Preto da Eva | Fazenda Esteio | Reserva km37);decimalLatitudeDWC - depends on the location adm_level and the individual X and Y, or Angle and Distance attributes, which are used to calculate these global coordinates for the record; if individual has multiple locations (a monitored bird), the location closest to the voucher date is obtaineddecimalLongitudeDWC - same as for decimalLatitudeoccurrenceRemarksDWC - text note associated with this recordassociatedMediaDWC - urls to ODB media files associated with the recorddatasetName- the name of the ODB Dataset to which the record belongs to DWCaccessRights- the ODB Dataset access privacy setting - DWCbibliographicCitation- the ODB Dataset citation - DWClicense- the ODB Dataset license - DWC

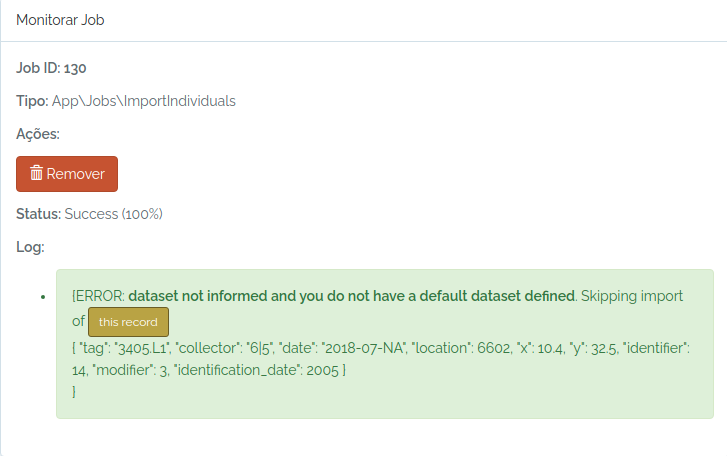

Jobs Endpoint

The

jobsendpoints interact with the UserJobs table. The basic usage is getting a list of submitted data import jobs, along with a status message and logs. You can also get data from jobs produced using thesave_job=1parameter, or export jobs created using the web interface (with the csv option only)

GET parameters

status=stringreturn only jobs for the specified status: “Submitted”, “Processing”, “Success”, “Failed” or “Cancelled”;id=list- the job id or ids ;get_file- ifget_file=1, then you can get the data file saved by the job. This needs also a singleidparameter. Useful, when getting data with thesave_job=1option.

Response fields

id- the job idstatus- the status of the jobdispatcher- the type of the job, e.g, ImportTaxons;log- the job log messages, usually indicating whether the resources were successfully imported, or whether errors occurred; others.

Possible errors

This should be an extensive list of error codes that you can receive while using the API. If you receive any other error code, please file a bug report!

- Most of the time will be related to memory, if you are trying to get a large dataset, for example. See the get common parameters

- Error 401 - Unauthenticated. Currently not implemented. You may receive this error if you attempt to access some protected resources but didn’t provide an API token.

- Error 403 - Unauthorized. You may receive this error if you attempt to import or edit some protected resources, and either didn’t provide an API token or your user does not have enough privileges.

- Error 404 - The resource you attempted to see is not available. Note that you can receive this code if your user is not allowed to see a given resource.

- Error 413 - Request Entity Too Large. You may be attempting to send a very large import, in which case you might want to break it down in smaller pieces.

- Error 429 - Too many attempts. Wait one minute and try again.

- Error 500 - Internal server error. This indicates a problem with the server code. Please file a bug report with details of your request.

3.3 - POST data

-

The OpenDataBio R package is a client for this API.

-

Importing data from files through the web-interface require specifying the POST parameters here specified

-

Batch imports of Bibliographic References and MediaFiles are possible only through the web interface.

-

Authentication token is required for all POST

POST Individuals

Request fields allowed when importing individuals: